What is Machine Learning?

Machine Learning is a subset of Artificial Intelligence (AI) focused on learning from data, categorizing it, and making predictions that generalize to new data.

The core of Machine Learning is a variety of algorithms that are fed training data so that they improve at identifying patterns and making predictions. We call these trained algorithms Machine Learning models. The models are not programmed from scratch to handle each kind of input. Instead, they can categorize data or identify patterns in it without much human intervention, and they continue to improve as they are fed more data over time.

Machine Learning (ML) is strongly tied to data, but that data does not have to be purely numeric. One example is finding trends in hospital visits in relation to the time of year. We can see ML being used all around us. Among its most prominent uses are recommendation engines on popular streaming services, which suggest new shows a viewer may like based on their watch history, and the identification of objects in photographs.

ML models’ adaptive abilities are based on datasets, rather than programming.

We can distinguish three main techniques for training Machine Learning models, which differ in how thoroughly the data is described (labeled):

- Supervised learning:

  - Its data is usually strictly labeled, and the labels determine its meaning. A well-known example is flower identification and classification. When a model is fed training datasets describing petals, anthers, and sepals, or even labeled photos, the algorithm starts to create categories and assign certain values to them. The model finds the conditions that define each category and is then ready to associate future data with them. When the target is a continuous value rather than a category, the task becomes regression, where the model captures the correlation between values in the data and the predicted outcome.

  - Overfitting is a problem that can occur: the model recognizes patterns in the training data, but those patterns may not apply to a wider dataset. To guard against overfitting, evaluate the model on data it has not seen during training.

- Unsupervised learning:

  - Like supervised learning, this type searches for connections between variables, but the data has no labels or tags. Large volumes of data are searched for patterns without context. It can be used as a first step to label the data before a supervised learning process.

- Reinforcement learning:

  - The model is not trained on sample data. Instead, it learns through trial and error, which makes it a behavioral learning model. It corrects the sequence of actions it takes based on feedback from the results of those actions. The algorithm needs to find an association between the goal and the actions that lead to quicker or more precise problem solving. Reinforcement learning can be compared to teaching a young puppy commands and giving it a treat every time it succeeds.
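To make the supervised workflow and the overfitting check described above more concrete, here is a minimal sketch in plain Python. It is not a production method: the flower measurements are made up for illustration, the species names are only example labels, and the nearest-centroid classifier is just one simple technique chosen to keep the example short. The helper names (`fit_centroids`, `predict`, `accuracy`) are hypothetical.

```python
from collections import defaultdict
from math import dist

# Hypothetical measurements (petal length cm, petal width cm) with labels.
# The numbers are invented for illustration, not taken from a real dataset.
train = [
    ((1.4, 0.2), "setosa"),
    ((1.3, 0.2), "setosa"),
    ((1.5, 0.3), "setosa"),
    ((4.5, 1.5), "versicolor"),
    ((4.1, 1.3), "versicolor"),
    ((4.7, 1.4), "versicolor"),
]
# Held-out labeled examples that the model never sees during training.
held_out = [
    ((1.6, 0.2), "setosa"),
    ((4.4, 1.4), "versicolor"),
]

def fit_centroids(examples):
    """Learn one average point (centroid) per label from labeled examples."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(column) for column in zip(*points))
        for label, points in grouped.items()
    }

def predict(centroids, features):
    """Assign the label whose centroid is closest to the new measurement."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

def accuracy(centroids, examples):
    """Fraction of examples whose predicted label matches the true label."""
    hits = sum(predict(centroids, f) == label for f, label in examples)
    return hits / len(examples)

centroids = fit_centroids(train)
train_accuracy = accuracy(centroids, train)
held_out_accuracy = accuracy(centroids, held_out)
print(f"train accuracy: {train_accuracy:.2f}")
print(f"held-out accuracy: {held_out_accuracy:.2f}")
```

If the accuracy on the held-out examples were much lower than on the training examples, that gap would be a hint that the model has overfitted to the training data.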

History of Machine Learning:

The foundations of machine learning were laid in the 1940s and 1950s, with the development of early computing machines and the emergence of the field of artificial intelligence. Researchers, most notably Alan Turing, began exploring concepts related to learning and adaptation in machines. During the 1950s, researchers such as Arthur Samuel began experimenting with machine learning algorithms, particularly in the context of game playing. Samuel's work on a self-learning checkers program marked one of the earliest applications of machine learning.

1956: Dartmouth Conference - The Dartmouth Conference, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, is considered the birth of the field of artificial intelligence. While machine learning was not the sole focus of the conference, it laid the groundwork for research in AI and machine learning.

During the following years, AI research predominantly focused on symbolic approaches, such as expert systems and rule-based reasoning. These systems relied on handcrafted rules and knowledge representations rather than learning from data. The resurgence of interest in neural networks and connectionist approaches in the 1980s marked a significant milestone in the history of machine learning. Researchers such as Geoffrey Hinton, Yann LeCun, and Yoshua Bengio made pioneering contributions to the development of neural network models and learning algorithms.

In the 1980s and 1990s, researchers developed and refined various machine learning algorithms, including decision trees, Bayesian networks, support vector machines, and ensemble methods. The field witnessed advancements in both supervised and unsupervised learning techniques. The rise of data mining and big data soon followed. With the proliferation of digital data and the advent of data mining technologies, machine learning gained traction in applications such as data analysis, pattern recognition, and predictive modeling. Researchers explored techniques for handling large datasets and extracting actionable insights from data.

Since 2000, the emergence of deep learning, fueled by advances in neural network architectures, optimization algorithms, and computational resources, has revolutionized the field of machine learning. Deep learning models have achieved remarkable success in areas such as image recognition, natural language processing, and speech recognition. Additionally, the advent of big data technologies has enabled the processing and analysis of massive datasets, further driving advancements in machine learning and AI.

Different algorithms used in Machine Learning:

Some algorithms are better suited to organizing data, while others are suited to predicting values or categories. Some of the most common are:

-

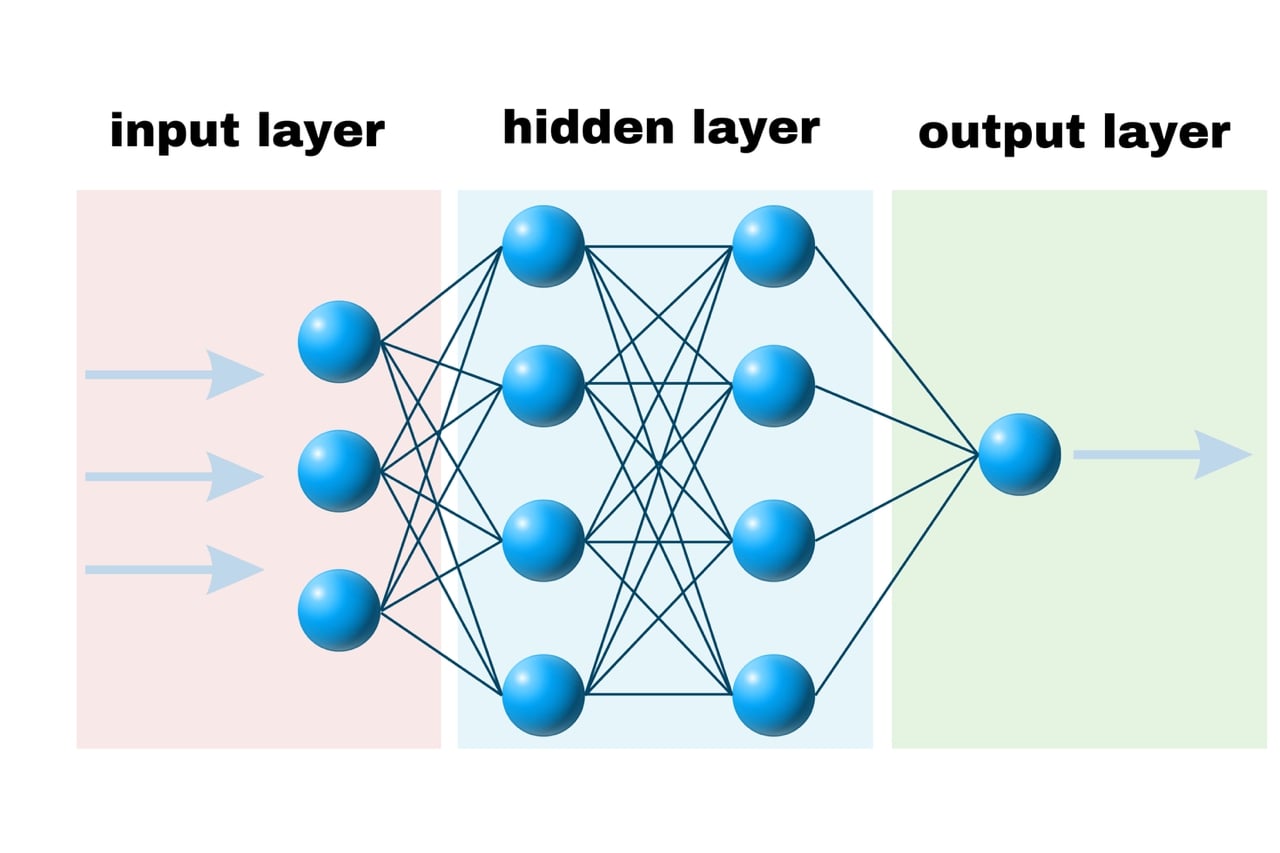

Neural network

- Probably most famous because of tries to simulate how we, or more precise, our minds work. It consists of huge number of nodes connected to each other in at least three layers. Thanks to this design it can be trained to deal with abstractions or undefined tasks, all that thanks to thousands or even millions of small nodes making modifications to this data connected to each other, processing input information layer by layer. More complex tasks can be assigned to Deep Learning technique using notably more hidden layers inside a model.

- Bayesian

  - These algorithms can be configured in advance with a bias (a prior) toward some variables. This means the model does not depend entirely on the dataset. This comes in handy when there is not enough data available to train the model properly, or when data scientists have knowledge of the subject and want the model to give certain variables more weight.

- Decision tree

  - The algorithm's decisions are represented in a branching structure. Each choice is represented by a node with values assigned according to the probability of the outcome. Each node may connect to the next possible choices, forming a structure that resembles the roots of a tree. Decision trees are widely used in decision analysis in operations research, where they help identify the strategy most likely to succeed.

- Clustering

  - It is an unsupervised strategy in which the model groups similar unlabeled data points into clusters. The data may come from social media profiles, customer purchases, or movie information. The algorithm segregates the data points into groups, making it easier to create a separate strategy for each group.

- Linear regression

  - Regression algorithms are the bread and butter of Machine Learning for data scientists and analysts, and they are among the most widely used. They help you measure the degree of correlation between variables in a dataset, or even estimate future values from the original data.

  - An important note: you must understand the dataset and its context so that you are not misled by faulty output. Such errors arise when correlation is mistaken for causation.
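As a rough illustration of the linear regression item above, the following sketch fits a straight line with ordinary least squares and measures the degree of correlation between two variables. It uses only the Python standard library. The data (hours studied and exam scores) is invented for this example, and the function names `fit_line` and `pearson_r` are hypothetical helpers, not part of any library.

```python
from math import sqrt
from statistics import mean

# Hypothetical data, made up for illustration: hours studied and exam scores.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 61.0, 63.0, 70.0]

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_mean, y_mean = mean(xs), mean(ys)
    covariation = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    x_variation = sum((x - x_mean) ** 2 for x in xs)
    slope = covariation / x_variation
    intercept = y_mean - slope * x_mean
    return slope, intercept

def pearson_r(xs, ys):
    """Degree of linear correlation between two variables, from -1 to 1."""
    x_mean, y_mean = mean(xs), mean(ys)
    covariation = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    x_variation = sum((x - x_mean) ** 2 for x in xs)
    y_variation = sum((y - y_mean) ** 2 for y in ys)
    return covariation / sqrt(x_variation * y_variation)

slope, intercept = fit_line(hours, scores)
correlation = pearson_r(hours, scores)
predicted_score = slope * 6.0 + intercept  # estimate for an unseen input

print(f"score = {slope:.2f} * hours + {intercept:.2f}")
print(f"correlation r = {correlation:.3f}")
print(f"predicted score for 6 hours: {predicted_score:.1f}")
```

A correlation close to 1 only says that the two variables move together in this sample. It does not show that one causes the other, which is why understanding the dataset and its context still matters.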

4 Pros and Cons of ML:

Machine Learning has numerous advantages, but it also comes with its share of disadvantages. Here's an overview:

- Advantages:

  - Automation - ML algorithms can automate repetitive tasks and processes, saving time and reducing manual effort.

  - Scalability - ML models can scale to handle large datasets and complex problems efficiently, making them suitable for big data analytics.

  - Personalization - ML algorithms can analyze data to provide personalized recommendations and experiences, enhancing user satisfaction.

  - Predictive Analytics - ML enables predictive analytics, allowing businesses to forecast future trends, behaviors, and outcomes based on historical data.

- Disadvantages:

  - Data Dependency - ML models require large amounts of high-quality data for training, and the quality of predictions is highly dependent on the quality and quantity of the data.

  - Bias and Fairness - ML models can inherit biases from the data they are trained on, leading to unfair or discriminatory outcomes if not carefully addressed.

  - Interpretability - Deep learning models, in particular, can be highly complex and difficult to interpret, making it challenging to understand the reasoning behind their predictions.

  - Overfitting - ML models can overfit to the training data, capturing noise or irrelevant patterns, which can lead to poor generalization performance on unseen data.

Literature:

There are numerous excellent resources on Machine Learning (ML) that cover a wide range of topics from beginner to advanced levels. Here are some of the best articles, reports, and books:

- "A Few Useful Things to Know About Machine Learning" by Pedro Domingos - This paper provides insightful tips and perspectives on various aspects of Machine Learning.

- "Understanding Machine Learning: From Theory to Algorithms" by Shai Shalev-Shwartz and Shai Ben-David - This book covers fundamental concepts in Machine Learning theory and algorithms.

- "Deep Learning" by Yann LeCun, Yoshua Bengio, and Geoffrey Hinton - A comprehensive overview of deep learning techniques, particularly neural networks.

- "The AI Index Report" - Produced by the Stanford Institute for Human-Centered AI, this report provides data-driven insights into the progress and trends in artificial intelligence.

- "Pattern Recognition and Machine Learning" by Christopher M. Bishop - This book provides a comprehensive introduction to Machine Learning, covering both classical and modern techniques.

- "Deep Learning" by Ian Goodfellow, Yoshua Bengio, and Aaron Courville - A highly regarded book on deep learning, covering both theoretical foundations and practical applications.

- "Machine Learning Yearning" by Andrew Ng - This book offers practical advice and best practices for building and deploying Machine Learning systems in real-world scenarios.

These resources cover a wide range of topics within Machine Learning, from theoretical foundations to practical applications and industry insights.

Conclusions:

In summary, Machine Learning is a subfield of artificial intelligence that empowers computers to learn from data and make predictions or decisions without explicit programming. ML algorithms iteratively improve their performance on a specific task by learning from examples, allowing them to generalize and make predictions on new, unseen data.

ML finds applications across various domains, including natural language processing, computer vision, healthcare, finance, and autonomous vehicles, driving innovation and advancements in technology. Overall, Machine Learning continues to revolutionize industries, enabling intelligent decision-making, automation, and personalized experiences.
