AutoML Example Projects: A Guide with 10 Popular Datasets

Automated Machine Learning (AutoML) helps create machine learning models without doing everything manually. Normally, data scientists have to pick algorithms, adjust settings, and test results many times to build a good model. This process takes a lot of time. AutoML automates these steps, making it faster and easier to create high-quality models. It also allows people without deep technical knowledge to use AI.

In this article, I will introduce 10 popular datasets that you can use for machine learning. These datasets cover different types of tasks, including binary classification, multiclass classification, and regression. To train models on these datasets, I will use mljar-supervised, an open-source AutoML tool that I created. This tool works in Python and makes it easy to train and evaluate machine learning models. It automatically prepares data, selects the best algorithms, tunes hyperparameters, and generates documentation for each model.

To make AutoML even more accessible, I built a desktop app called MLJAR Studio. It is based on JupyterLab and comes with features that improve working with Python notebooks. In this article, I will show both Python code and screenshots from MLJAR Studio to explain how it works.

MLJAR Studio has a no-code extension called Piece-of-Code, which lets you create Python code using a graphical interface. I will use Piece-of-Code to generate a full Python notebook that trains an AutoML model on the Adult Income dataset. The same approach can be used for all the datasets in this article.

First, I will give a short description of each dataset. Then, I will guide you step by step through building a machine learning pipeline with mljar-supervised and MLJAR Studio.

You can find all the datasets mentioned in this article in my GitHub repository: pplonski/datasets-for-start. This repository contains curated datasets from various sources, specifically selected for people who would like to improve their machine learning skills. The datasets are ready to use, making them great for learning data analysis, classification, and regression. Feel free to browse, download, and experiment with different datasets.

Table of contents:

- Binary Classification - Adult Income Dataset

- Binary Classification - Breast Cancer Dataset

- Binary Classification - Credit Scoring Dataset

- Binary Classification - Employee Attrition Dataset

- Binary Classification - Pima Indians Diabetes Dataset

- Binary Classification - SPECT Heart Dataset

- Binary Classification - Titanic Dataset

- Multiclass Classification - Iris Dataset

- Multiclass Classification - Wine Dataset

- Regression - House Prices Dataset

AutoML Implementation Steps:

- AutoML Get Started

- Load Dataset

- Split Data into Train and Test

- Select Features and Target

- Train AutoML Model

- Make Predictions on Test data

1. Adult Income Dataset

The Census Income Dataset (Adult Dataset) is used to predict whether an individual's annual income exceeds $50K based on demographic and employment data. It contains 32,561 samples with 15 attributes, including age, education, occupation, and hours worked per week. The target column is "income", a binary label with two values: <=50K and >50K. The dataset requires preprocessing, including handling missing values, converting categorical features, and scaling numerical attributes. It is widely used for classification tasks in machine learning, and common models applied to it include logistic regression, decision trees, and neural networks.
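In the original UCI release of this dataset, missing values in columns such as workclass, occupation, and native-country are written as "?". If you preprocess the data yourself instead of relying on AutoML, a minimal sketch could look like the one below. The three inline rows are made-up sample data, and whether the copy in the datasets-for-start repository still uses "?" is an assumption you should check.

```python
import io

import pandas as pd

# Three made-up rows in the Adult format; "?" marks a missing value
raw = io.StringIO(
    "age,workclass,occupation,sex,income\n"
    "39, State-gov, Adm-clerical, Male, <=50K\n"
    "50, ?, ?, Female, >50K\n"
    "28, Private, Sales, Female, <=50K\n"
)

# skipinitialspace strips the blank after each comma; na_values turns "?" into NaN
df = pd.read_csv(raw, skipinitialspace=True, na_values="?")

# Count missing values per column
missing = df.isna().sum()
print(missing)

# Encode the binary target as 0/1 (1 means income above $50K)
y = (df["income"] == ">50K").astype(int)

# One-hot encode categorical features; rows with NaN get 0 in every indicator column
X = pd.get_dummies(df.drop(columns=["income"]), columns=["workclass", "occupation", "sex"])
print(X.columns.tolist())
```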

The Census Income Dataset is often used in fairness research because it highlights biases in income prediction. The data shows a strong dependency between sex and income: women in the dataset are less likely than men to earn more than $50K, which can lead to biased model predictions. This makes the dataset useful for studying algorithmic fairness, bias mitigation, and ethical AI practices. Researchers analyze how machine learning models trained on this data may amplify societal biases and explore techniques for fairer decision-making.
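One simple check used in fairness studies is to compare the share of positive predictions (income above $50K) across groups, sometimes called the demographic parity difference. The sketch below computes it with pandas; the `sex` and `predicted` values are invented for illustration, and in practice you would use the test rows together with the labels returned by your model.

```python
import pandas as pd

# Invented example: a sensitive attribute and a model's 0/1 predictions (1 = >50K)
results = pd.DataFrame({
    "sex": ["Male", "Male", "Male", "Male", "Female", "Female", "Female", "Female"],
    "predicted": [1, 1, 0, 1, 0, 1, 0, 0],
})

# Share of positive predictions within each group
positive_rate = results.groupby("sex")["predicted"].mean()
print(positive_rate)

# Demographic parity difference: gap between the highest and lowest group rate
parity_gap = positive_rate.max() - positive_rate.min()
print(f"Demographic parity difference: {parity_gap:.2f}")
```

A value of 0 would mean both groups receive positive predictions at the same rate; larger values indicate a bigger gap.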

2. Breast Cancer Dataset

The Breast Cancer Wisconsin dataset is used to classify whether a breast tumor is malignant or benign based on cell nucleus characteristics. It contains 569 samples with 32 columns, including an "id" column that should be excluded from analysis as it has no predictive power. The target column is "diagnosis". Features are extracted from digitized images of fine needle aspirates (FNA) of breast masses, describing various properties of the cell nuclei. The dataset has been used in research involving classification techniques, including decision trees and linear programming-based methods. I am super happy that we can use machine learning to help improve our health!
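If you want to explore the data without downloading the CSV, scikit-learn ships a copy of the same Wisconsin Diagnostic dataset. It already excludes the "id" column and stores the diagnosis separately, so the 32 columns described above become 30 features plus a target. Note that in scikit-learn's encoding 0 means malignant and 1 means benign, which may differ from the labels in the CSV version, so treat this as a quick exploration aid rather than a replacement for the file in the repository.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer

# Load the bundled copy; X holds the 30 numeric cell-nucleus features, y the diagnosis
data = load_breast_cancer()
X, y = data.data, data.target

print(X.shape)  # number of samples and features
print(list(data.target_names))  # label names in code order

# Count samples per class to see how balanced the diagnosis is
counts = dict(zip(data.target_names, np.bincount(y)))
print(counts)
```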

3. Credit Scoring Dataset

The Give Me Some Credit dataset is used to predict the probability that an individual will experience financial distress within the next two years. It contains 150,000 samples with 12 attributes, including an "Id" column that should be excluded from analysis. The target column is "SeriousDlqin2yrs", indicating whether a person had serious delinquency in loan payments. Credit scoring algorithms use this type of data to estimate the likelihood of loan default, helping banks decide who gets financing and on what terms. This dataset has been used in machine learning competitions to improve credit risk modeling. The goal is to create better models that help borrowers make informed financial decisions.
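Because only a small share of borrowers in this kind of data experience serious delinquency, plain accuracy can look high even for a useless model. Since the task is to predict a probability, a ranking metric such as ROC AUC is often a better fit. The sketch below uses invented labels and scores to illustrate the difference; it is not based on the actual Give Me Some Credit data.

```python
from sklearn.metrics import accuracy_score, roc_auc_score

# Invented labels: 1 = serious delinquency, only 2 positives out of 10
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]

# A "model" that always predicts 0 and gives everyone the same probability
always_zero = [0] * 10
constant_proba = [0.1] * 10

# A model whose probabilities rank the two delinquent borrowers highest
model_proba = [0.05, 0.10, 0.02, 0.20, 0.15, 0.08, 0.30, 0.12, 0.70, 0.55]

acc_zero = accuracy_score(y_true, always_zero)
auc_constant = roc_auc_score(y_true, constant_proba)
auc_model = roc_auc_score(y_true, model_proba)

print("Accuracy of always predicting 0:", acc_zero)
print("ROC AUC of constant scores:", auc_constant)
print("ROC AUC of ranking model:", auc_model)
```

The always-zero model scores 80% accuracy here, while its ROC AUC of 0.5 reveals that it cannot separate the two classes at all.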

4. Employee Attrition Dataset

The Employee Attrition dataset is designed to analyze factors influencing employee turnover in a company. It contains 1,470 samples with 35 attributes, and the target column is "Attrition", indicating whether an employee has left the company. The dataset requires preprocessing, including converting categorical variables and applying feature scaling. A train/test split is already provided, with 1,200 samples in the training set and 270 samples in the test set, so no additional data splitting is needed. It is a fictional dataset created by IBM data scientists and allows for insightful analysis, such as comparing attrition rates by job role, income, and work-life balance. This dataset is commonly used for HR analytics and machine learning exercises in workforce retention modeling.
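The preprocessing mentioned above (encoding categorical variables and scaling numeric ones) can be sketched with pandas and scikit-learn as follows. The rows are invented, and the column names ("Age", "MonthlyIncome", "OverTime", "Attrition") are assumed to match the IBM file. mljar-supervised prepares data automatically, so a step like this is only needed if you train models yourself.

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Invented rows with a few columns assumed to exist in the IBM attrition file
df = pd.DataFrame({
    "Age": [41, 49, 37, 33],
    "MonthlyIncome": [5993, 5130, 2090, 2909],
    "OverTime": ["Yes", "No", "Yes", "Yes"],
    "Attrition": ["Yes", "No", "Yes", "No"],
})

# Target: 1 if the employee left the company
y = (df["Attrition"] == "Yes").astype(int)

# One-hot encode the categorical feature
X = pd.get_dummies(df.drop(columns=["Attrition"]), columns=["OverTime"])

# Scale numeric columns to zero mean and unit variance.
# With the provided split, fit the scaler on the train file only and reuse it on the test file.
numeric_cols = ["Age", "MonthlyIncome"]
scaler = StandardScaler()
X[numeric_cols] = scaler.fit_transform(X[numeric_cols])

print(X.head())
```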

5. Pima Indians Diabetes Dataset

The Pima Indians Diabetes dataset is used to predict whether a patient has diabetes based on medical diagnostic measurements. It contains 768 samples with 9 attributes, where the target column is "Outcome", a binary variable with values 0 (no diabetes) and 1 (diabetes). The dataset originates from the National Institute of Diabetes and Digestive and Kidney Diseases and includes only female patients of Pima Indian heritage who are at least 21 years old. Predictor variables include the number of pregnancies, BMI, insulin level, and age, among others. This dataset is widely used in medical research and machine learning for classification tasks. The goal is to develop a model that can accurately diagnose diabetes based on the given features. It is another example of using machine learning to improve our health.

6. SPECT Heart Dataset

The SPECT Heart Dataset is used for diagnosing heart conditions based on Single Photon Emission Computed Tomography (SPECT) images. It contains a train dataset with 80 samples and a test dataset with 187 samples, both with 23 attributes. The target column is "diagnosis", classifying patients as either normal (0) or abnormal (1) based on features extracted from SPECT images. The dataset consists of binary features derived from the original imaging data, making it suitable for testing machine learning algorithms. The CLIP3 algorithm, applied to this dataset, achieved 84% accuracy compared to cardiologists' diagnoses. Machine learning models trained on this data can help doctors detect and diagnose heart disease more efficiently.

7. Titanic Dataset

The Titanic Dataset is used to predict passenger survival based on various features such as age, gender, and ticket class. It contains a train dataset with 891 samples and 12 attributes, and a test dataset with 418 samples. The target column is "Survived", a binary variable indicating whether a passenger survived (1) or not (0). The column "PassengerId" should be excluded from analysis as it has no predictive power. This dataset is widely used for learning classification models and feature engineering techniques in machine learning.

8. Iris Dataset

The Iris Dataset is a classic dataset used for classification tasks in machine learning. It contains 150 samples with 4 attributes: sepal length, sepal width, petal length, and petal width. The target column is "species", which classifies each sample into one of three flower species: Setosa, Versicolor, or Virginica. This dataset is well-balanced, with 50 samples for each species. It is widely used for testing classification algorithms, feature selection, and data visualization techniques. The Iris dataset is simple yet effective for learning fundamental machine learning concepts.
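scikit-learn also bundles the Iris data, which makes it easy to confirm the class balance mentioned above without downloading anything. Its feature names differ slightly from the CSV column names (for example "sepal length (cm)"), so treat this as a quick check rather than a drop-in replacement for the file in the repository.

```python
from sklearn.datasets import load_iris

# as_frame=True returns the features and target as pandas objects
iris = load_iris(as_frame=True)
X, y = iris.data, iris.target

print(X.shape)

# Map numeric codes to species names and count samples per class
species = y.map(dict(enumerate(iris.target_names)))
print(species.value_counts())
```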

9. Wine Dataset

The Wine Dataset is used for multiclass classification to determine the origin of wines based on chemical analysis. It contains 178 samples with 14 columns, where the first column "class" serves as the target variable, categorizing wines into three different classes. The dataset includes features such as alcohol content, malic acid, ash, and flavonoids, which help distinguish between wine types.

The Boston Housing Dataset is also used for regression tasks to predict house prices based on various features. It contains 506 samples with 14 attributes, where the target column is "MEDV", representing the median house value in thousands of dollars. The dataset includes factors such as crime rate, average number of rooms per dwelling, and accessibility to highways. It has commonly been used to test regression algorithms, feature engineering techniques, and model evaluation metrics like mean squared error, as a real-world example of predicting continuous values.

10. House Prices Dataset

The House Prices Dataset is used for regression tasks to predict property sale prices based on various housing attributes. It contains 1,460 samples with 81 attributes, where the target column is "SalePrice", representing the house's final selling price in dollars. The dataset includes features such as lot size, building type, overall quality, year built, number of rooms, and neighborhood location. The "Id" column should be excluded from training as it has no predictive value. The dataset provides train and test splits, allowing easy model evaluation. It is widely used for real estate price prediction and testing regression algorithms.

A similar dataset, Boston Housing, was historically used in machine learning examples, but it is no longer recommended because of bias. One of its features, derived from the racial composition of neighborhoods, raised ethical concerns about fairness in housing predictions. As a result, datasets like House Prices, which do not include such a feature, are now preferred for real estate modeling.

AutoML Get Started

Getting started with AutoML is easy, and I will be using mljar-supervised to automate the machine learning workflow. I will run it in Explain mode, which trains multiple models, including Baseline, Linear, Decision Tree, Random Forest, XGBoost, Neural Network, and an Ensemble. The Baseline model serves as a simple benchmark: for classification it predicts the most frequent class (for regression, the mean of the target). I will show how to run AutoML with Python code and how the same steps look in MLJAR Studio, which provides a graphical user interface for an interactive and user-friendly experience. This approach makes machine learning more accessible, allowing you to focus on insights rather than manual model tuning.
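To see why a baseline is useful, the sketch below uses scikit-learn's `DummyClassifier` with the `most_frequent` strategy as a stand-in for such a benchmark (mljar-supervised's own Baseline may be implemented differently). On imbalanced data like Adult Income, where most people earn <=50K, this model already reaches an accuracy equal to the majority-class share, so any real model has to beat that number. The labels below are invented.

```python
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score

# Invented labels: 3 of 4 samples belong to the majority class "<=50K"
X = [[25], [38], [52], [41]]  # a single dummy feature; the baseline ignores it
y = ["<=50K", "<=50K", ">50K", "<=50K"]

# Always predicts the most frequent class seen during fit
baseline = DummyClassifier(strategy="most_frequent")
baseline.fit(X, y)

predictions = baseline.predict(X)
baseline_accuracy = accuracy_score(y, predictions)
print(predictions)
print(f"Baseline accuracy: {baseline_accuracy}")
```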

Load dataset

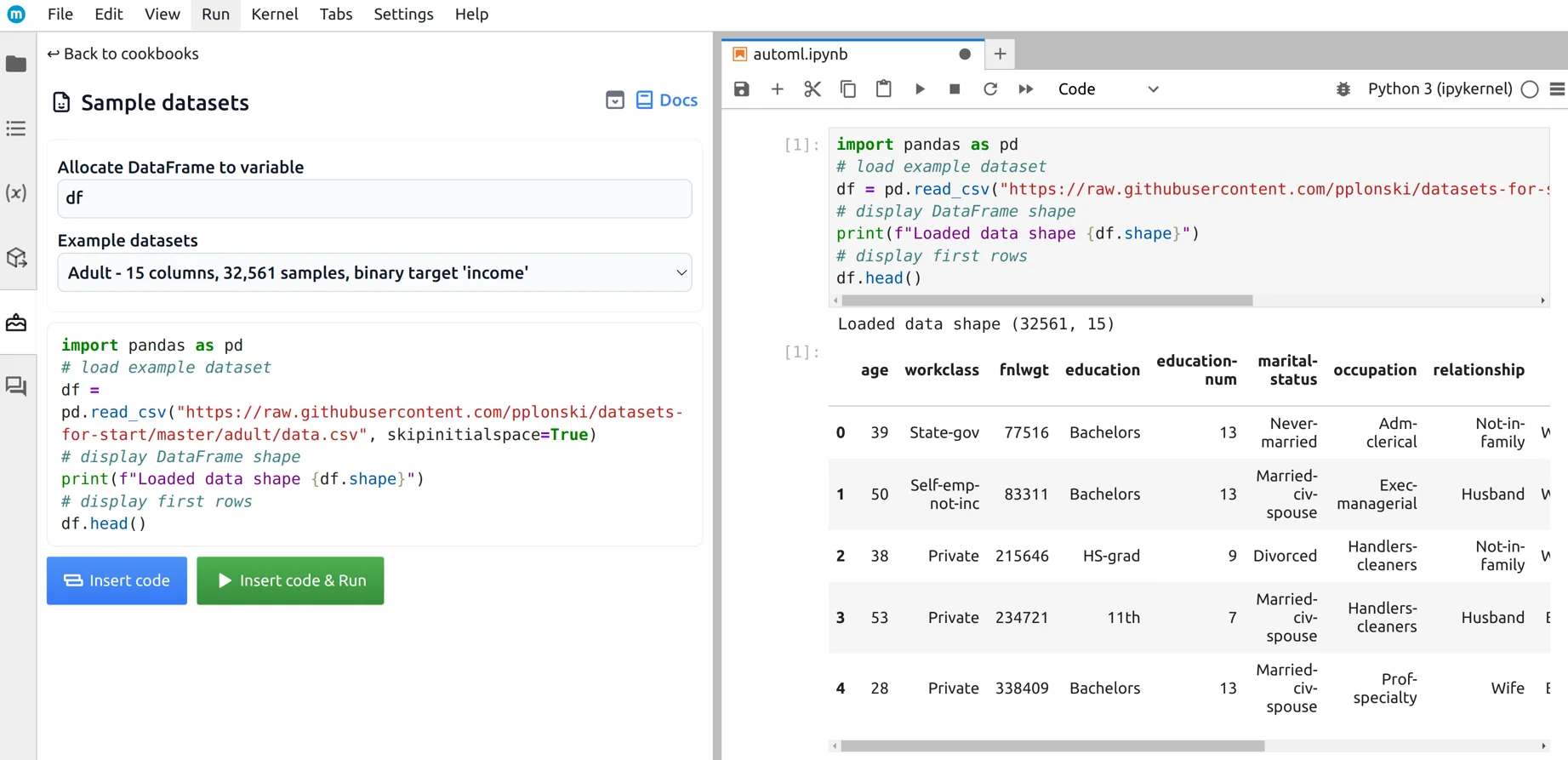

The first step is loading the dataset into a Pandas DataFrame. I will use the Adult Census Income dataset, which is commonly used for classification tasks. The code below loads the dataset from a URL, prints its shape, and displays the first few rows.

```python
import pandas as pd

# load example dataset
df = pd.read_csv("https://raw.githubusercontent.com/pplonski/datasets-for-start/master/adult/data.csv", skipinitialspace=True)
# display DataFrame shape
print(f"Loaded data shape {df.shape}")
# display first rows
df.head()
```

In the Piece-of-Code extension, you can see that df is the name of the DataFrame, and Adult is the selected dataset. All datasets described in this article are available in the Sample datasets recipe, making it easy to load and explore them. You can learn more about this recipe in the Sample datasets documentation on our website. Yes, our documentation is interactive as well :)

AutoML split data into train and test

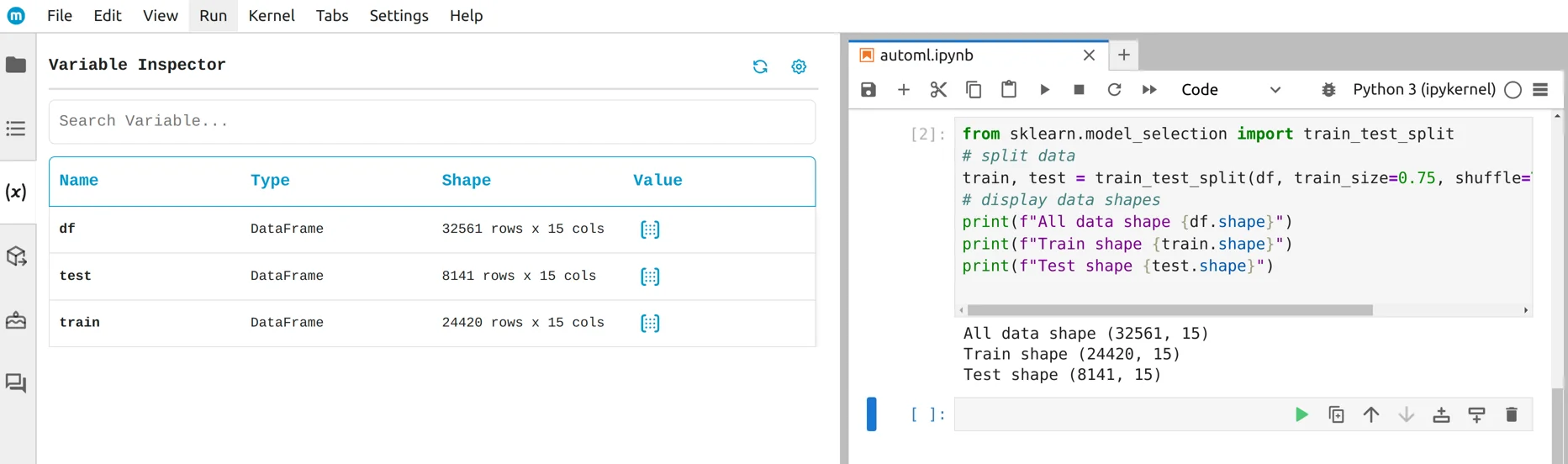

I split the data into training and testing sets using the train test split recipe from the Data Wrangling Cookbook. I allocated 75% of the data for training and the remaining 25% for testing. The code below performs the split and prints the shapes of the resulting datasets.

```python
from sklearn.model_selection import train_test_split

# split data
train, test = train_test_split(df, train_size=0.75, shuffle=True, random_state=42)
# display data shapes
print(f"All data shape {df.shape}")
print(f"Train shape {train.shape}")
print(f"Test shape {test.shape}")
```
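For binary targets like income, where one class is much more common, you may want both sets to keep the same class proportions. scikit-learn's `train_test_split` supports this through its `stratify` argument. The small DataFrame below is invented so the example runs on its own; in the notebook you would pass `df` and `stratify=df["income"]`.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Invented data: 12 samples of "<=50K" and 4 samples of ">50K" (ratio 3:1)
df = pd.DataFrame({
    "age": list(range(20, 36)),
    "income": ["<=50K"] * 12 + [">50K"] * 4,
})

# stratify keeps the 3:1 class ratio in both train and test
train, test = train_test_split(
    df, train_size=0.75, shuffle=True, random_state=42, stratify=df["income"]
)

print(train["income"].value_counts())
print(test["income"].value_counts())
```

Without `stratify`, a random split of a small or imbalanced dataset can leave noticeably different class ratios in the two sets.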

If your dataset is already split into train and test, you can skip this step.

You can check your data shapes in the Variable Inspector, which is available in MLJAR Studio.

AutoML select X and y

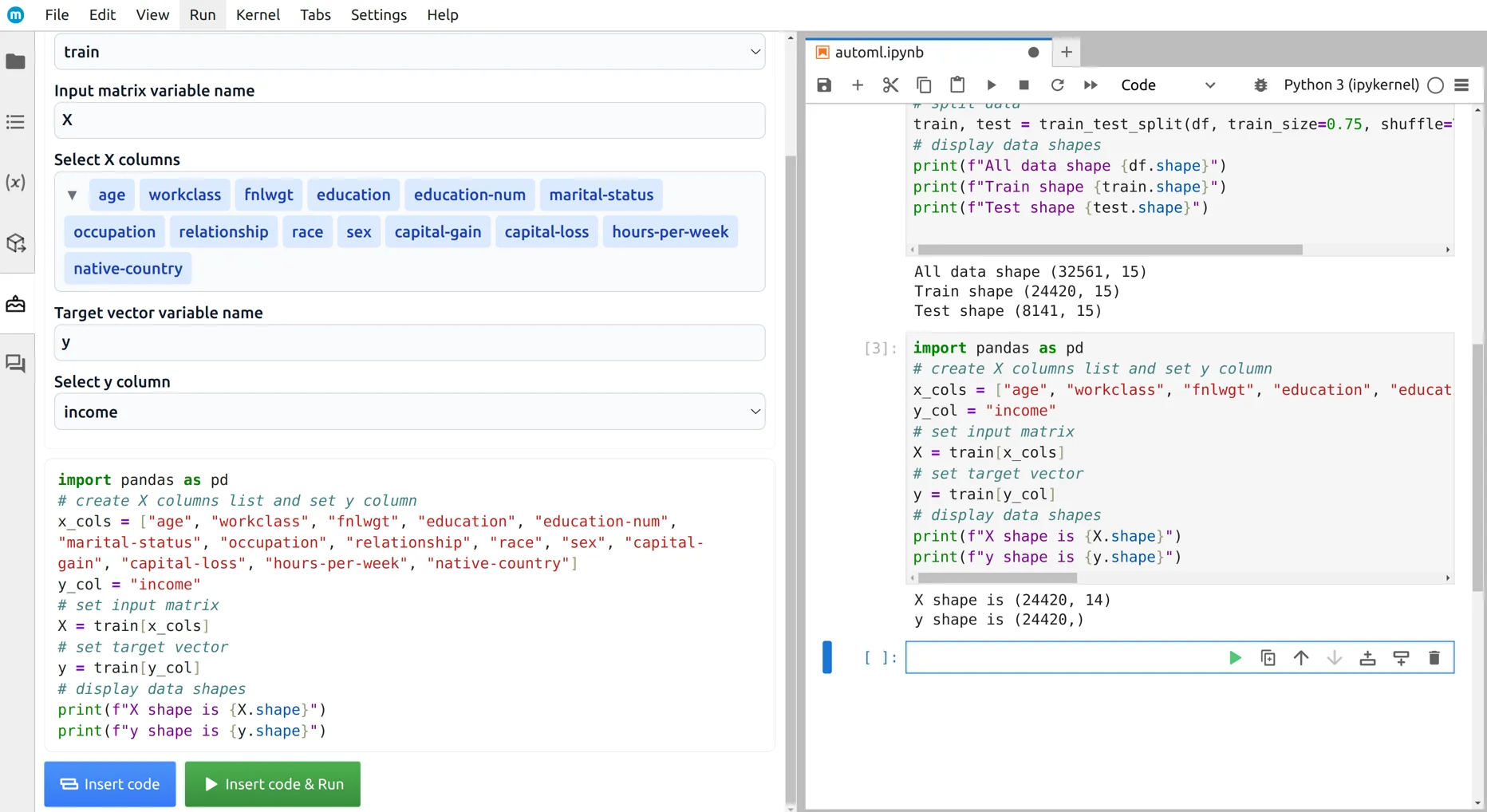

After splitting the data, the next step is selecting input features (X) and the target variable (y). I used a predefined list of feature columns while setting "income" as the target. The code below extracts X and y from the training set and prints their shapes.

```python
import pandas as pd

# create X columns list and set y column
x_cols = ["age", "workclass", "fnlwgt", "education", "education-num", "marital-status", "occupation", "relationship", "race", "sex", "capital-gain", "capital-loss", "hours-per-week", "native-country"]
y_col = "income"
# set input matrix
X = train[x_cols]
# set target vector
y = train[y_col]
# display data shapes
print(f"X shape is {X.shape}")
print(f"y shape is {y.shape}")
```

If your dataset contains an "id" column, please remember to remove it from analysis, as it has no predictive value. You can find more details in the Select X and Y documentation.
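Instead of typing every feature name, you can build the feature list from the DataFrame columns and leave out the target and any identifier column. The sketch below assumes the identifier is called "id"; adjust the name to your dataset (for example "Id" in House Prices or "PassengerId" in Titanic). The tiny DataFrame is invented.

```python
import pandas as pd

# Invented example with an identifier, two features, and a target
train = pd.DataFrame({
    "id": [101, 102, 103],
    "age": [39, 50, 28],
    "hours-per-week": [40, 13, 40],
    "income": ["<=50K", ">50K", "<=50K"],
})

y_col = "income"
id_cols = ["id"]  # assumed identifier column name; change it for your dataset

# Keep every column except the target and identifiers, in their original order
x_cols = [col for col in train.columns if col != y_col and col not in id_cols]

X = train[x_cols]
y = train[y_col]
print(x_cols)
print(f"X shape is {X.shape}")
```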

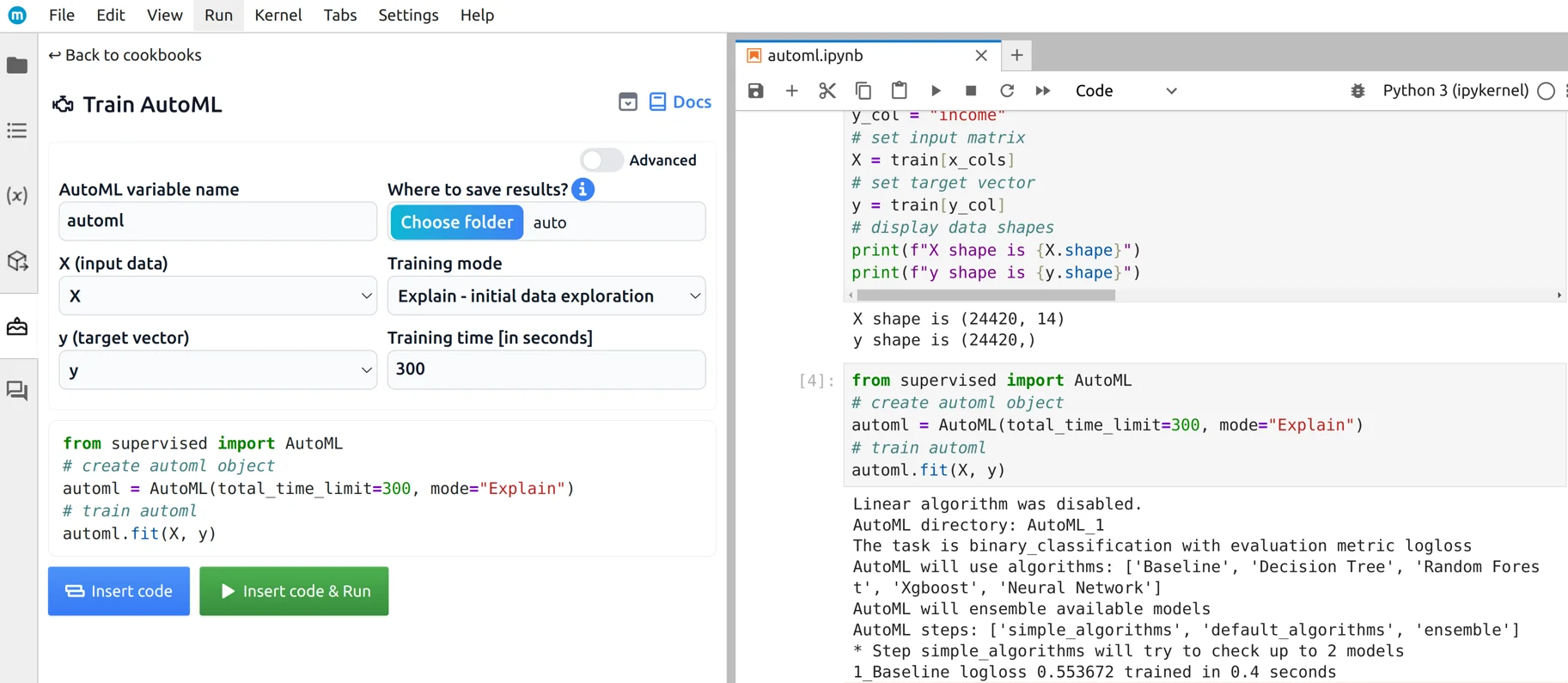

AutoML training

Now it's time to train the AutoML model using mljar-supervised. I created an AutoML object with a total training time limit of 300 seconds and used the "Explain" mode, which trains multiple models, including Baseline, Linear, Decision Tree, Random Forest, XGBoost, Neural Network, and an Ensemble. The following code trains AutoML on the selected features (X) and target variable (y):

```python
from supervised import AutoML

# create automl object
automl = AutoML(total_time_limit=300, mode="Explain")
# train automl
automl.fit(X, y)
```

This step automates model selection, hyperparameter tuning, and evaluation, making it easy to get high-quality results with minimal effort. MLJAR Studio also provides a graphical interface for training AutoML; see the AutoML cookbook for more information.

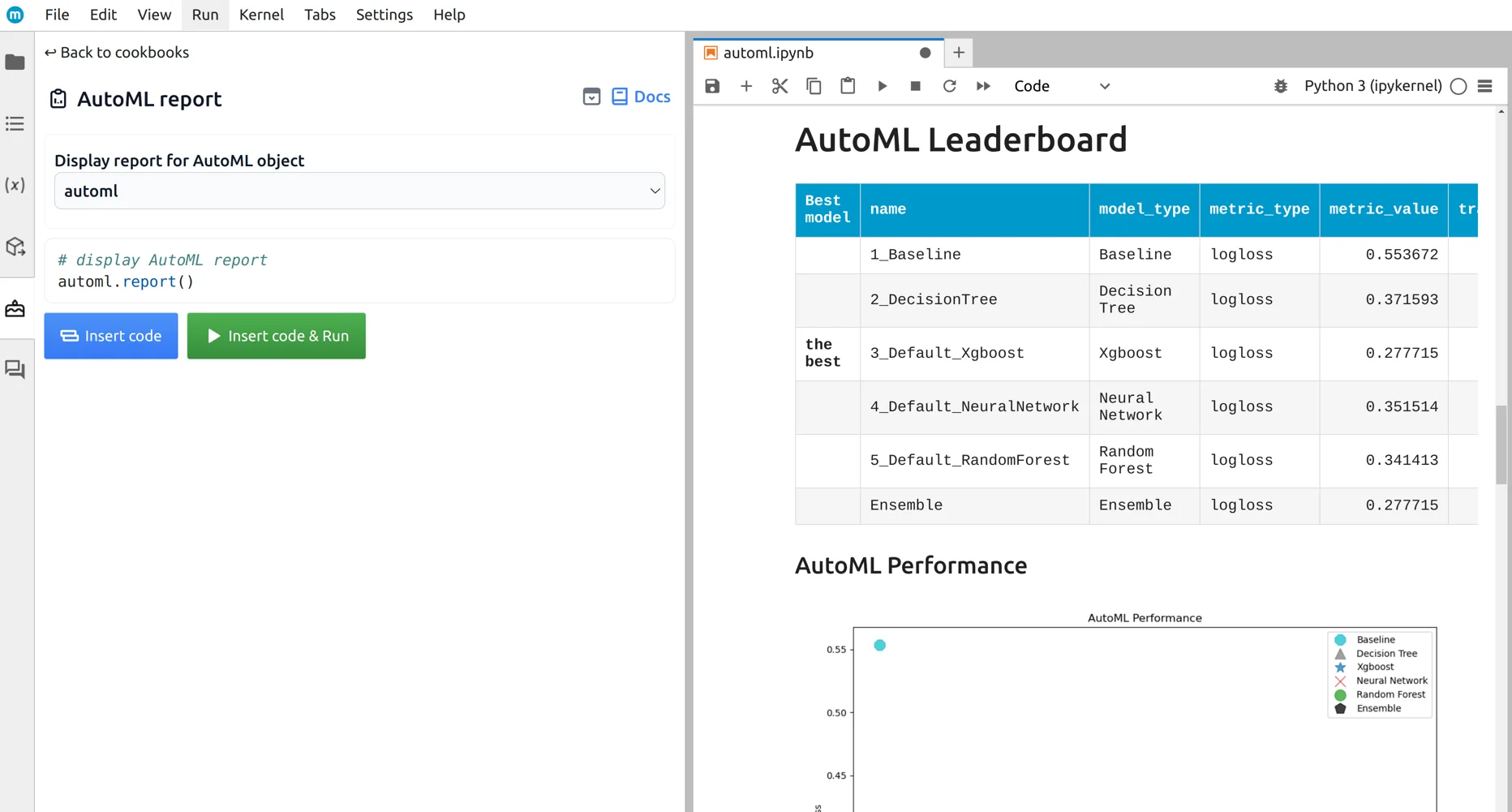

Each model trained by mljar-supervised has automatically generated documentation, making it easy to analyze its performance. After training, we can display a leaderboard to compare different models and check their metrics.

```python
# display AutoML report
automl.report()
```

Additionally, we can explore detailed reports for each model directly inside the notebook. This helps in understanding how different algorithms performed and selecting the best one for deployment.

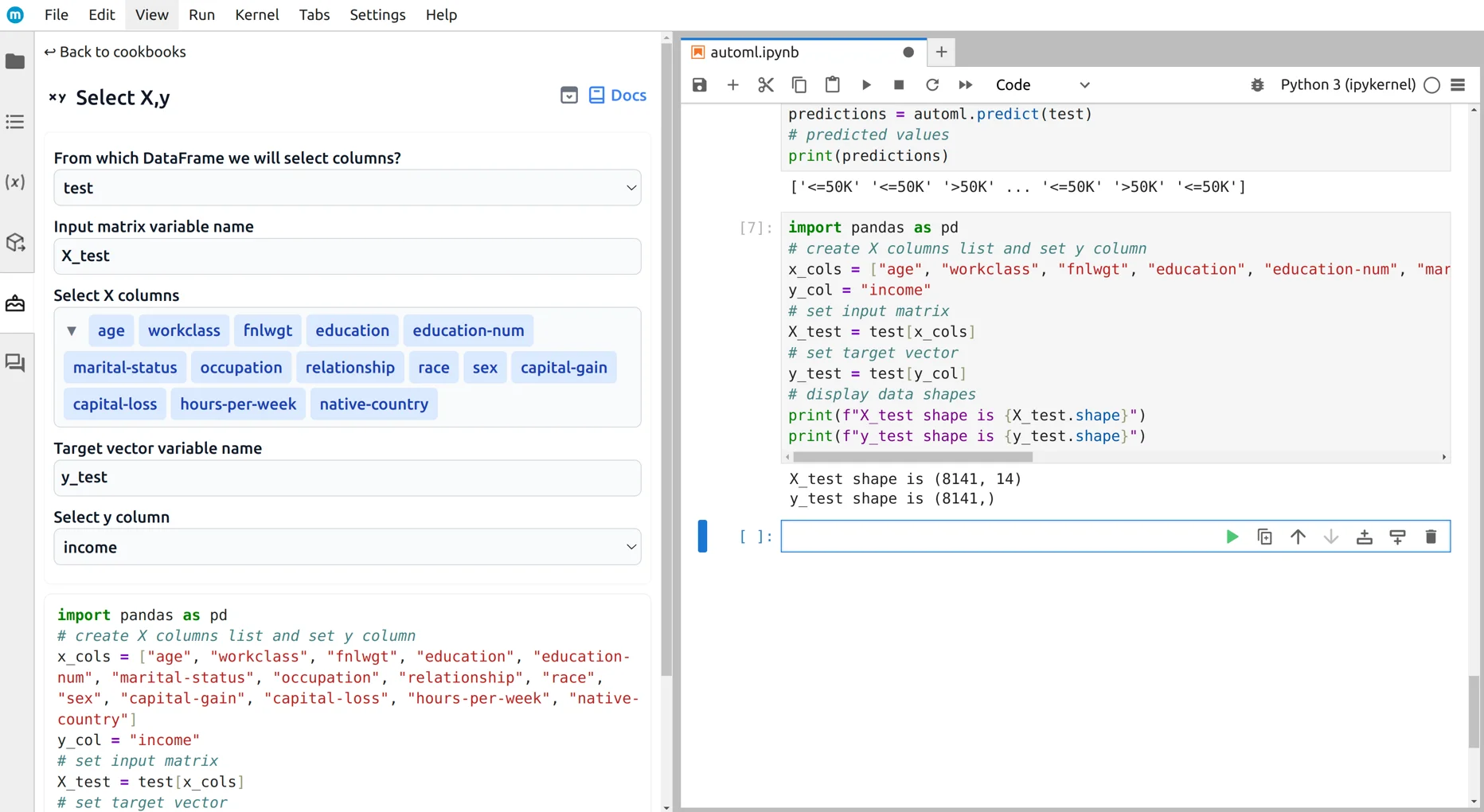

AutoML predicts on test data

After training the AutoML model, we need to prepare the test data for evaluation. Just like with the training set, we select input features (X_test) and the target variable (y_test). The code below extracts X_test and y_test from the test dataset and prints their shapes.

```python
import pandas as pd

# create X columns list and set y column
x_cols = ["age", "workclass", "fnlwgt", "education", "education-num", "marital-status", "occupation", "relationship", "race", "sex", "capital-gain", "capital-loss", "hours-per-week", "native-country"]
y_col = "income"
# set input matrix
X_test = test[x_cols]
# set target vector
y_test = test[y_col]
# display data shapes
print(f"X_test shape is {X_test.shape}")
print(f"y_test shape is {y_test.shape}")
```

This step ensures that the test data is properly formatted for evaluating the trained AutoML model.

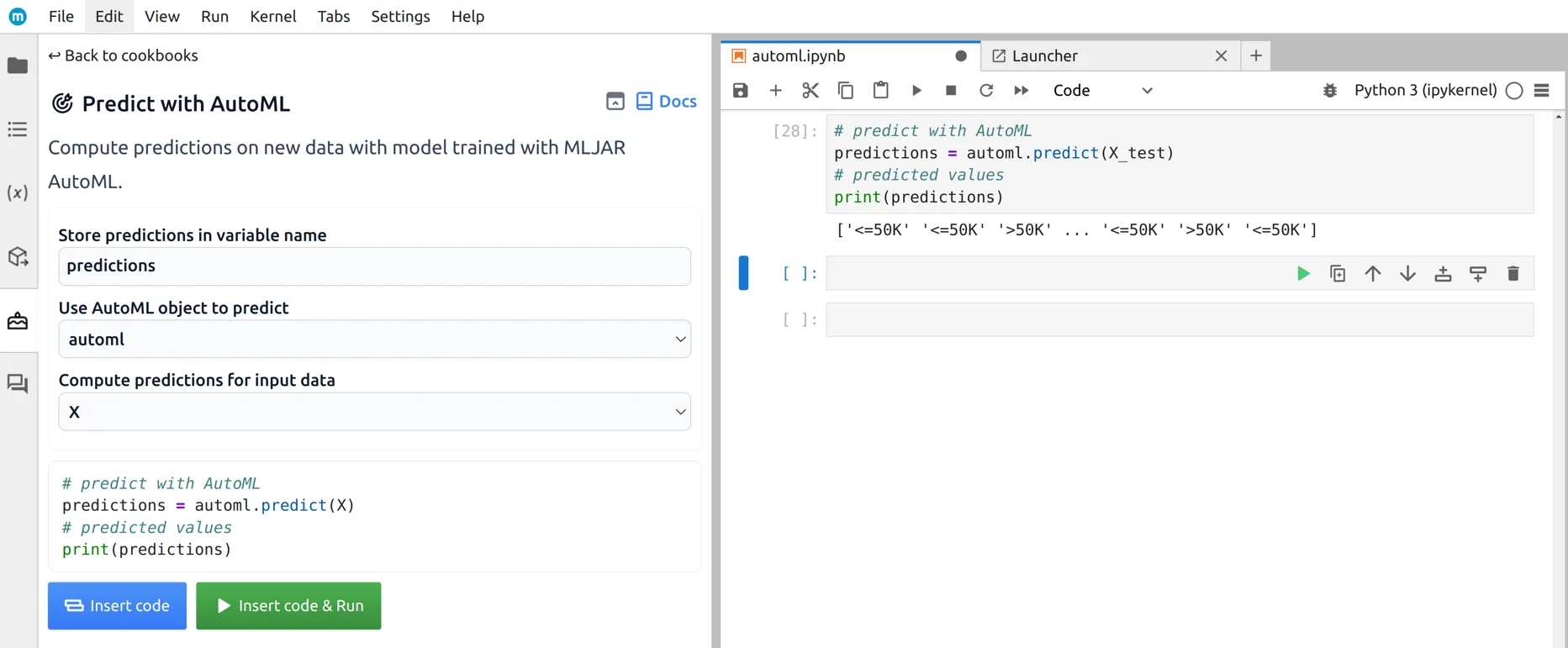

To get predictions from the trained AutoML model, we use the Predict with AutoML functionality. Predictions can be generated directly in the notebook by passing the trained AutoML object and test data (X_test). Additionally, in MLJAR Studio, we can select the AutoML object and input data using the graphical interface for an interactive experience.

```python
# predict with AutoML
predictions = automl.predict(X_test)
# predicted values
print(predictions)
```

This allows us to quickly evaluate model performance and integrate predictions into further analysis or applications.

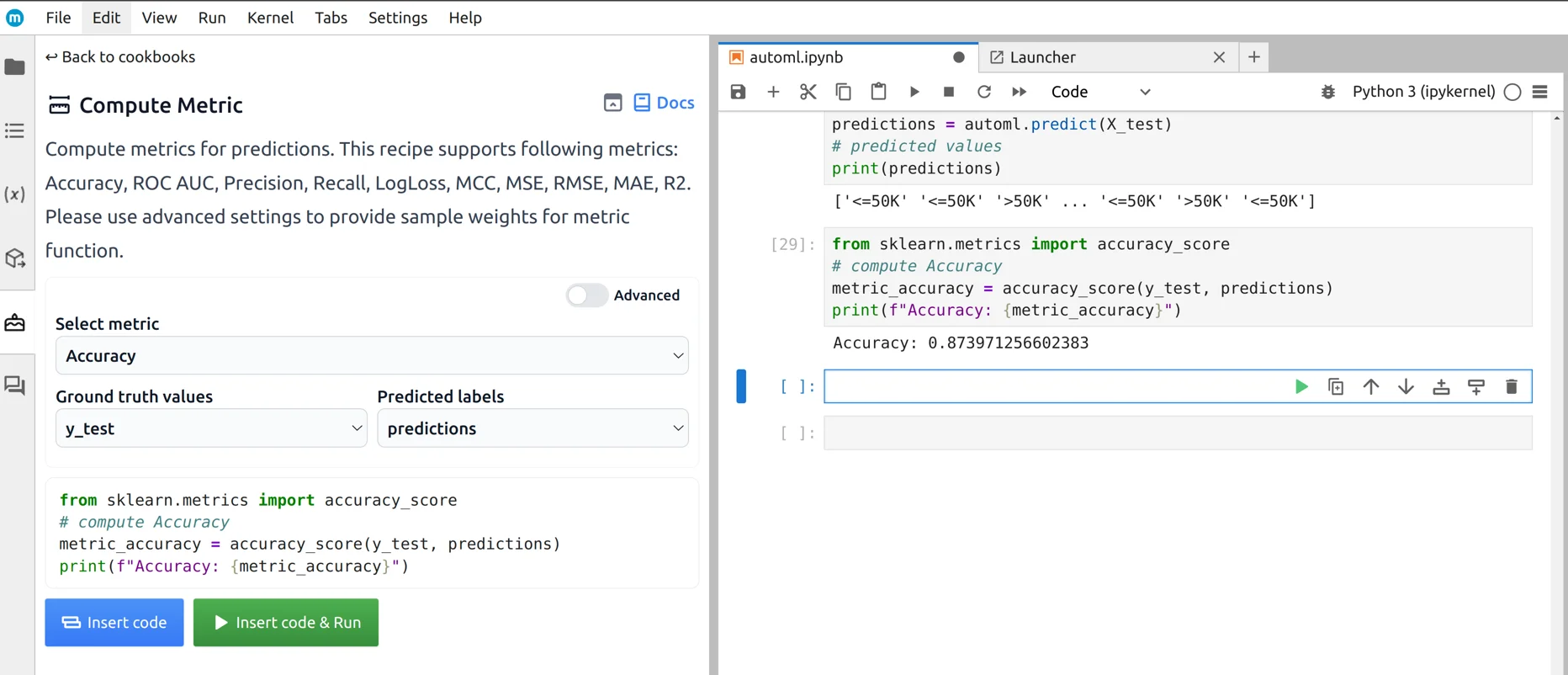

To evaluate the model's performance, we can use the Compute Metric recipe. It uses accuracy_score from scikit-learn, which measures the fraction of the model's predictions that match the actual test labels (y_test). The code below calculates and prints the accuracy:

```python
from sklearn.metrics import accuracy_score

# compute Accuracy
metric_accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {metric_accuracy}")
```
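Accuracy only makes sense for classification. For a regression target such as "SalePrice" in the House Prices dataset, error metrics like mean absolute error (MAE) and root mean squared error (RMSE) are common choices. The sketch below computes both with scikit-learn and NumPy; the prices are invented, and in the notebook you would pass `y_test` and `predictions` instead.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error

# Invented sale prices (in dollars) and model predictions
y_true = np.array([200000, 150000, 320000, 180000])
y_pred = np.array([210000, 140000, 300000, 180000])

# MAE: average absolute difference between prediction and actual price
mae = mean_absolute_error(y_true, y_pred)

# RMSE: square root of the mean squared error, in the same units as the target
rmse = np.sqrt(mean_squared_error(y_true, y_pred))

print(f"MAE: {mae:.0f}")
print(f"RMSE: {rmse:.0f}")
```

Because errors are squared before averaging, RMSE penalizes a few large mistakes more heavily than MAE does.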

This helps assess how well the trained AutoML model generalizes to unseen data.

Summary

AutoML simplifies the process of building machine learning models by automating key steps like algorithm selection, hyperparameter tuning, and evaluation. In this article, I introduced 10 popular datasets for machine learning, covering binary classification, multiclass classification, and regression tasks. Each dataset is described with its size, target column, and real-world applications such as credit scoring, medical diagnosis, and housing price prediction. I used mljar-supervised, an open-source AutoML tool, to train models efficiently in Python notebooks and demonstrated how the same steps work in MLJAR Studio's graphical interface. The article walks through the complete AutoML workflow: loading data, splitting it into train and test sets, selecting features, training models, making predictions, and evaluating results. Additionally, I showed how to generate reports and analyze models inside a notebook, where a model leaderboard helps compare the different models trained by mljar-supervised. All datasets used in the examples are available in my GitHub repository github.com/pplonski/datasets-for-start, making it easy for readers to explore and experiment with AutoML in their own projects.

If you are interested in trying MLJAR Studio, here are some useful links:

✅ Read more on GitHub: https://github.com/mljar/studio

✅ Download for free: https://platform.mljar.com

✅ Watch tutorial videos on YouTube: https://youtube.com/@mljar

💡 Stay connected:

🔹 Connect with me on LinkedIn: https://linkedin.com/in/piotr-plonski-mljar/

🌟 Support our open-source project!

If you find mljar-supervised helpful, please give us a star on GitHub: https://github.com/mljar/mljar-supervised 🚀✨
