8 Open-Source AutoML Frameworks: How to Choose the Right One

Building a good Machine Learning pipeline requires a lot of experimentation. There is no one-size-fits-all solution when choosing a Machine Learning algorithm. This applies not only to the learning algorithm itself but also to data processing. To make things even more challenging, most learning algorithms have many parameters—called hyperparameters—that also need to be selected. The number of possible solutions is endless. Everyone wants to find the best solution in the shortest time possible.

To make this process easier, people have developed software that automates experimentation. This is known as Automated Machine Learning, or AutoML. AutoML systems can choose algorithms, tune their hyperparameters, and handle data preprocessing and feature engineering. These systems can be very powerful.

AutoML frameworks help us select the right algorithms, but there are now so many of them that a new question arises: who will help us choose the right AutoML framework? In this article, I’ll do a quick review of open-source AutoML solutions. Don't worry: although there are many AutoML projects on GitHub, most of them have been abandoned, so the list of serious candidates is short.

For some background, I’ve been working on AutoML systems since 2016, and I’m the author of one open-source AutoML package included in this list.

1. Auto-WEKA

AutoML software began emerging in the 2010s. One of the earliest packages, Auto-WEKA, was released in 2012. Built on WEKA, it was developed by researchers at the University of British Columbia. A Python wrapper is also available.

The source code is available on GitHub, but the project is no longer maintained.

Auto-WEKA used Bayesian optimization to automate algorithm selection, hyperparameter tuning, and feature selection. All models came from the WEKA library. It was a pioneering AutoML tool, influencing later frameworks like Auto-sklearn and TPOT.

2. TPOT: Genetic Algorithm-Based AutoML

The next package is TPOT, developed by Epistasis Lab at Cedars-Sinai. It uses a genetic algorithm to search for the optimal combination of learning models, hyperparameters, and feature preprocessing techniques. TPOT is implemented in Python, with its source code available on GitHub. The first release came out in 2015, but the project is no longer actively developed. The authors are now working on a successor, TPOT2.

Originally designed for biomedical data, TPOT can be used for a wide range of applications. It is frequently used in AutoML research as a strong baseline for comparing new methods. However, because it relies on genetic algorithms, finding a good solution can take a long time.
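To make the genetic-algorithm idea concrete, here is a minimal sketch in plain Python. It is not TPOT's code: the search space, the `toy_score` function (a stand-in for cross-validated accuracy), and all parameter values are made up for illustration. It only shows the general loop of selection, crossover, and mutation over pipeline configurations.

```python
import random

# Hypothetical search space: a "pipeline" is one choice per slot.
SEARCH_SPACE = {
    "scaler": ["none", "standard", "minmax"],
    "model": ["logistic", "tree", "forest"],
    "max_depth": [2, 4, 8, 16],
}


def random_pipeline(rng):
    """Sample one pipeline configuration uniformly at random."""
    return {key: rng.choice(values) for key, values in SEARCH_SPACE.items()}


def toy_score(pipeline):
    """Made-up stand-in for a cross-validated score (higher is better)."""
    score = 0.5
    score += {"none": 0.0, "standard": 0.1, "minmax": 0.05}[pipeline["scaler"]]
    score += {"logistic": 0.1, "tree": 0.15, "forest": 0.25}[pipeline["model"]]
    score += 0.1 - abs(pipeline["max_depth"] - 8) / 100
    return score


def crossover(a, b, rng):
    """Build a child by taking each slot from one of the two parents."""
    return {key: rng.choice([a[key], b[key]]) for key in SEARCH_SPACE}


def mutate(pipeline, rng, rate=0.2):
    """Randomly resample each slot with probability `rate`."""
    child = dict(pipeline)
    for key, values in SEARCH_SPACE.items():
        if rng.random() < rate:
            child[key] = rng.choice(values)
    return child


def evolve(generations=10, population_size=12, seed=0):
    rng = random.Random(seed)
    population = [random_pipeline(rng) for _ in range(population_size)]
    history = []  # best score seen at the start of each generation
    for _ in range(generations):
        population.sort(key=toy_score, reverse=True)
        history.append(toy_score(population[0]))
        parents = population[: population_size // 2]  # keep the fitter half
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return max(population, key=toy_score), history


best_pipeline, best_per_generation = evolve()
print(best_pipeline, round(toy_score(best_pipeline), 3))
```

Every generation has to score the whole population, and in a real system each score means training and validating a pipeline. That is where the long run times come from.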

3. Auto-sklearn

Auto-sklearn is an AutoML framework built on top of scikit-learn. Developed by researchers from the University of Freiburg, it was first introduced in 2015. Auto-sklearn uses Bayesian optimization to efficiently search for the best combination of models and hyperparameters. Additionally, it incorporates meta-learning, meaning it learns from previous experiments to speed up the search process. The source code is available on GitHub, though the repository appears abandoned: the last release was in 2023.

Auto-sklearn also automates feature preprocessing and model ensembling, optimizing these steps to improve predictive performance. The framework automatically builds and selects ensembles, which often results in stronger, more robust predictions. Auto-sklearn remains a widely used benchmark in AutoML research, serving as a reference point in academic studies and machine learning competitions.
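One common way to select an ensemble from a library of already trained models is greedy forward selection: repeatedly add the model (repeats allowed) that most reduces the error of the averaged validation predictions. The sketch below illustrates that idea in plain Python. The model names and predictions are made up, and it is a toy illustration, not Auto-sklearn's implementation.

```python
def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def average(prediction_lists):
    """Element-wise mean of several prediction vectors."""
    return [sum(column) / len(column) for column in zip(*prediction_lists)]


def greedy_ensemble(model_preds, y_true, max_size=5):
    """Greedy forward selection with replacement.

    model_preds: dict mapping a model name to its validation predictions.
    Returns the chosen model names and the ensemble's validation error.
    """
    chosen = []
    best_error = float("inf")
    for _ in range(max_size):
        candidates = []
        for name, preds in model_preds.items():
            trial = [model_preds[n] for n in chosen] + [preds]
            candidates.append((mean_squared_error(y_true, average(trial)), name))
        error, name = min(candidates)
        if error >= best_error:
            break  # no candidate improves the ensemble any further
        chosen.append(name)
        best_error = error
    return chosen, best_error


# Made-up validation labels and predicted probabilities.
y_valid = [1.0, 0.0, 1.0, 1.0, 0.0]
library = {
    "model_a": [0.9, 0.4, 0.6, 0.8, 0.3],
    "model_b": [0.6, 0.1, 0.9, 0.7, 0.4],
    "model_c": [0.5, 0.5, 0.5, 0.5, 0.5],
}

members, error = greedy_ensemble(library, y_valid)
print(members, round(error, 4))
```

In this toy data the two models make different mistakes, so their average beats either one alone, while the uninformative third model is never picked.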

4. MLJAR

I am the creator of MLJAR AutoML, a user-friendly and adaptable AutoML framework. It was first introduced in 2016 as a closed-source web service. After a major redesign in 2019, it became open-source under the MIT license, with the source code available on GitHub. The goal was to build an accessible yet powerful AutoML system that caters to users with different needs.

MLJAR AutoML provides four operation modes, allowing users to tailor the automation process:

- Explain – Focuses on model interpretability, useful for exploratory analysis.

- Perform – Balances predictive performance and computational efficiency.

- Compete – Uses extensive hyperparameter tuning and ensembling for maximum accuracy.

- Optuna – Leverages the Optuna framework for targeted optimization of selected algorithms.

The framework automatically generates documentation in Markdown or HTML, making it easy to review model performance and workflow details. In 2023, a fairness module was added to detect and mitigate bias in models, a feature that sets MLJAR AutoML apart from other AutoML frameworks.

Under the hood, MLJAR AutoML employs a heuristic approach to model selection, combining random search with hill climbing. It builds a library of models and ensembles them to enhance predictive accuracy.
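The combination of random search and hill climbing can be sketched in a few lines of plain Python. This is a simplified illustration, not the MLJAR source code: the hyperparameter grid and the `toy_loss` function are hypothetical stand-ins for real model training and validation.

```python
import random

# Hypothetical hyperparameter grid for a tree-based model.
GRID = {
    "learning_rate": [0.01, 0.025, 0.05, 0.1, 0.2],
    "max_depth": [2, 3, 4, 5, 6, 7, 8],
    "subsample": [0.5, 0.6, 0.7, 0.8, 0.9, 1.0],
}


def toy_loss(params):
    """Made-up validation loss with its minimum at (0.05, 5, 0.8)."""
    return (
        abs(GRID["learning_rate"].index(params["learning_rate"]) - 2)
        + abs(params["max_depth"] - 5)
        + abs(GRID["subsample"].index(params["subsample"]) - 3)
    ) / 10


def random_search(n_trials, rng):
    """Step 1: sample a few random configurations and keep the best one."""
    trials = [{k: rng.choice(v) for k, v in GRID.items()} for _ in range(n_trials)]
    return min(trials, key=toy_loss)


def neighbors(params):
    """Configurations that differ by one grid step in one hyperparameter."""
    for key, values in GRID.items():
        i = values.index(params[key])
        for j in (i - 1, i + 1):
            if 0 <= j < len(values):
                yield {**params, key: values[j]}


def hill_climb(start):
    """Step 2: move to the best neighbor until no neighbor improves the loss."""
    current = start
    while True:
        best_neighbor = min(neighbors(current), key=toy_loss)
        if toy_loss(best_neighbor) >= toy_loss(current):
            return current
        current = best_neighbor


rng = random.Random(42)
start = random_search(n_trials=5, rng=rng)
tuned = hill_climb(start)
print(start, "->", tuned)
```

The toy loss has a single minimum, so the climb always reaches it. Real validation losses are noisier, which is one reason to start from several random configurations rather than one.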

5. H2O AutoML

H2O was first released in 2012 by H2O.ai (formerly known as 0xdata). H2O AutoML, introduced around 2017, is part of the open-source H2O-3 platform. The source code is available on GitHub. H2O AutoML uses random search for hyperparameter optimization, making it simpler than Bayesian or evolutionary approaches.

H2O AutoML automates model training, tuning, and ensembling without requiring users to specify configurations. It supports a wide range of models, including gradient boosting machines (GBM), random forests, deep learning models, and generalized linear models (GLM). One of its strengths is scalability, as it can handle large datasets efficiently and takes advantage of multi-threaded processing. It also provides built-in model explainability, generating reports on feature importance and model performance.

H2O AutoML can be accessed via R, Python, and a web-based UI, making it accessible to both developers and analysts. The platform continues to be actively developed.

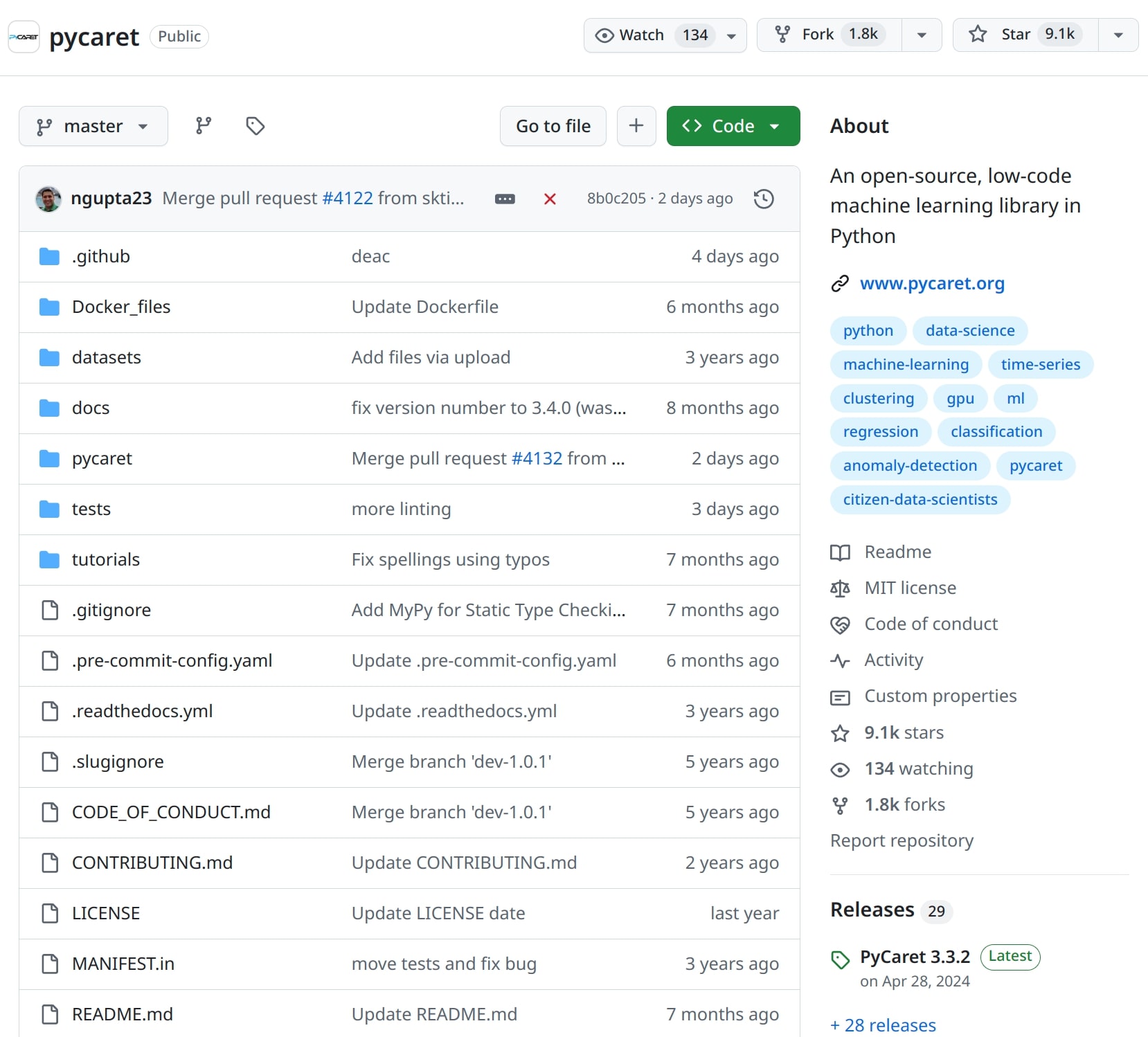

6. PyCaret

PyCaret is a low-code AutoML library designed to simplify machine learning workflows for both beginners and experienced practitioners. It provides an easy-to-use interface that automates data preprocessing, model selection, hyperparameter tuning, and ensembling. One of its key advantages is its quick setup, allowing users to build machine learning models with just a few lines of code. The source code is available on GitHub, and the project is actively maintained.

PyCaret supports a wide range of machine learning tasks, including classification, regression, clustering, and anomaly detection. It integrates well with scikit-learn, XGBoost, LightGBM, CatBoost, and other popular libraries, making it a flexible tool for rapid experimentation. Although it is designed for ease of use, its automated preprocessing and ensembling techniques often make it slower compared to lightweight AutoML frameworks. Despite this, PyCaret remains a popular choice for those who want a quick and efficient way to build machine learning models without deep technical expertise.

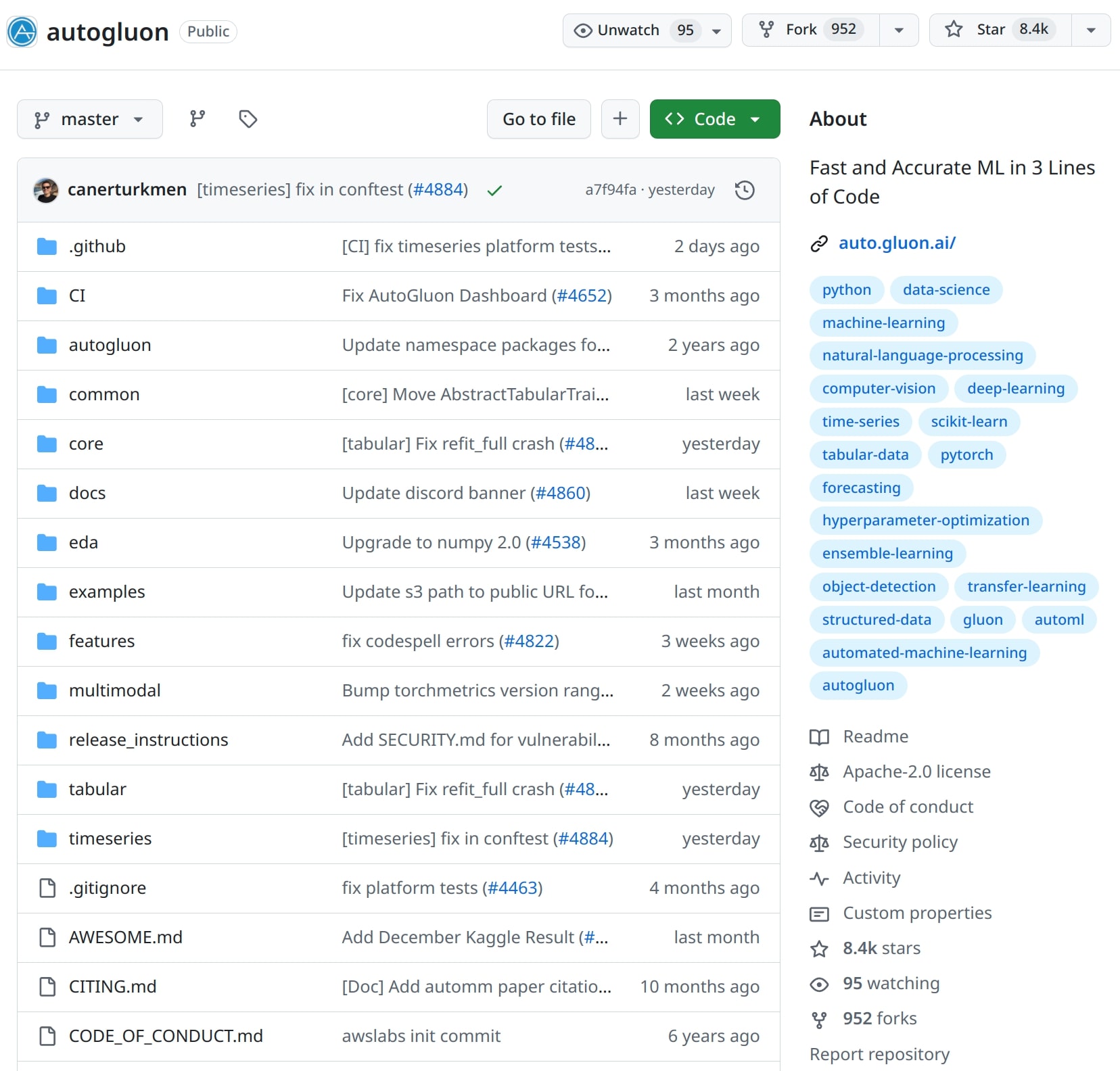

7. AutoGluon

AutoGluon was introduced by Amazon Web Services (AWS) AI in 2019. It was designed as an open-source AutoML framework that simplifies the training of high-performance machine learning models across different types of data, including tabular data, text, and images.

AutoGluon stands out for its multi-layered model ensembling, automated hyperparameter tuning, and deep learning integration, making it a powerful tool for both beginners and experienced machine learning practitioners. It automatically selects models and optimizes them, requiring minimal user intervention. One of its key advantages is its ability to leverage deep learning models, particularly for tasks involving text and images. Unlike many AutoML frameworks that focus primarily on tabular data, AutoGluon provides end-to-end solutions for multimodal learning. It is also designed to be efficient and scalable, making it well-suited for both research and production environments.

The source code is actively maintained and available on GitHub.

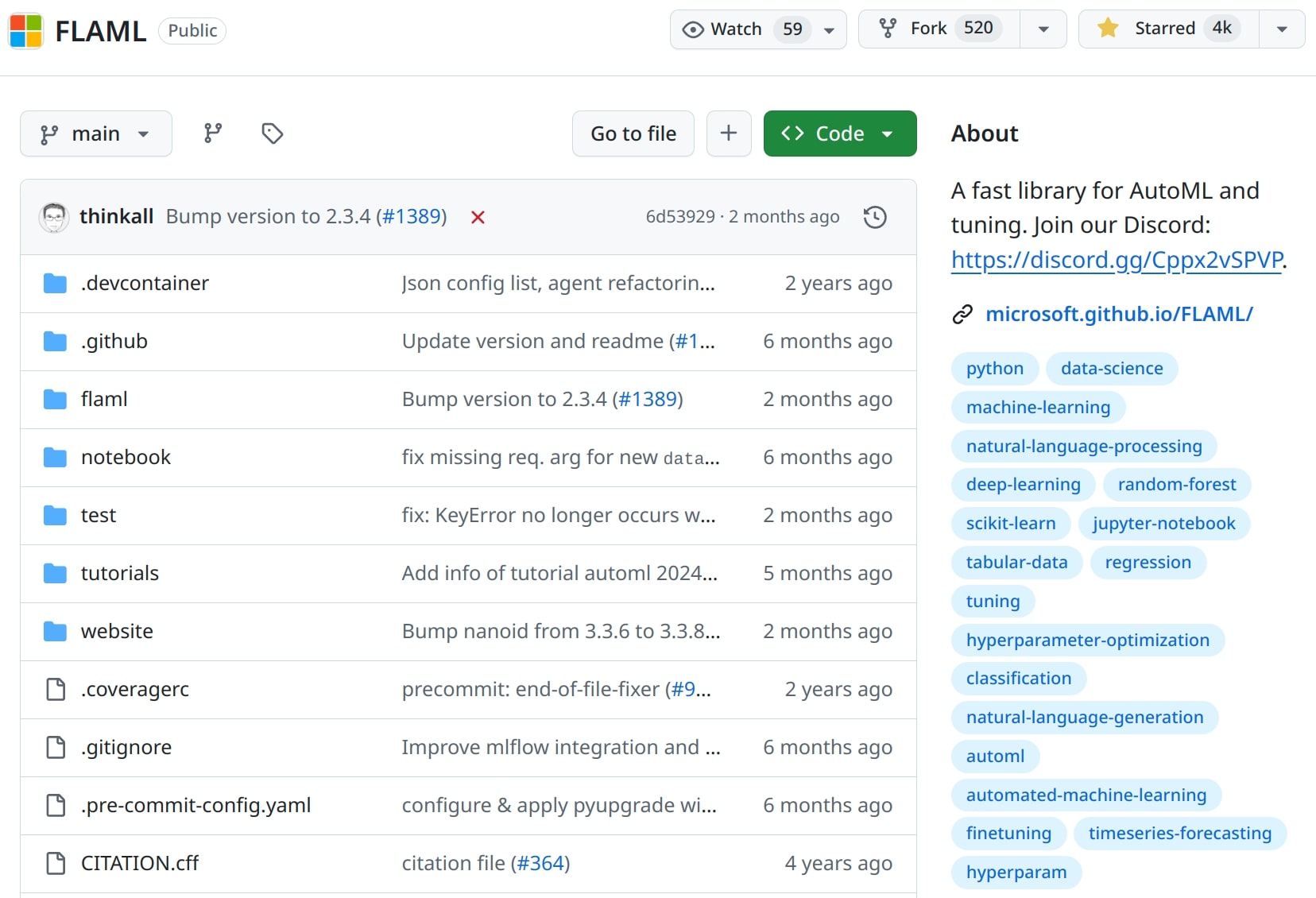

8. FLAML (Fast Lightweight AutoML)

FLAML (Fast Lightweight AutoML) was introduced by Microsoft Research in 2020. It was designed as a lightweight and efficient AutoML solution that focuses on delivering high-performance models with minimal computational cost. FLAML uses cost-effective hyperparameter optimization techniques, making it particularly suitable for scenarios where speed and efficiency are critical.

Unlike many AutoML frameworks that rely on exhaustive searches, FLAML employs budget-aware optimization strategies, allowing it to find strong models faster and with fewer resources. It supports a wide range of tasks, including classification, regression, time series forecasting, and NLP. FLAML is also well-integrated with popular machine learning libraries, such as scikit-learn, XGBoost, LightGBM, and Transformers. Due to its efficiency, FLAML is often used in low-resource environments, such as cloud-based applications and edge computing.
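As a rough illustration of what "budget-aware" can mean, the sketch below tries candidate configurations from cheapest to most expensive and stops as soon as the next one no longer fits the budget. It is a deliberately simplified stand-in, not FLAML's actual algorithm. The candidate names, costs, and scores are all hypothetical.

```python
def budget_aware_search(candidates, evaluate, budget):
    """Evaluate candidates cheapest-first until the budget would be exceeded.

    candidates: list of (name, estimated_cost) pairs, cost in arbitrary units
    evaluate:   callable mapping a name to a validation score (higher is better)
    budget:     total cost we are willing to spend
    """
    spent = 0.0
    best_name, best_score = None, float("-inf")
    tried = []
    for name, cost in sorted(candidates, key=lambda item: item[1]):
        if spent + cost > budget:
            break  # candidates are sorted by cost, so nothing later fits either
        spent += cost
        tried.append(name)
        score = evaluate(name)
        if score > best_score:
            best_name, best_score = name, score
    return best_name, best_score, spent, tried


# Hypothetical candidates with estimated training cost (say, seconds).
candidates = [
    ("lgbm_large", 40),
    ("linear", 1),
    ("lgbm_small", 5),
    ("rf_medium", 15),
    ("xgb_large", 60),
]
# Made-up validation scores standing in for real training runs.
scores = {
    "linear": 0.71,
    "lgbm_small": 0.80,
    "rf_medium": 0.78,
    "lgbm_large": 0.84,
    "xgb_large": 0.85,
}

best, score, spent, tried = budget_aware_search(candidates, scores.get, budget=30)
print(best, score, spent, tried)
```

With a budget of 30 the expensive models are never trained, and a reasonable model is still found after spending only part of the budget.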

The project is actively maintained, and the source code is available on GitHub.

Honorable Mentions

automl-gs

One of the most interesting features of automl-gs is that it first generates a Python script containing the machine learning pipeline before executing it. This allows users to review and modify the code, making it more transparent than fully automated solutions. The project was developed by @minimaxir, and the source code is available on GitHub. Unfortunately, the project is no longer maintained. Despite this, it remains a simple and useful tool for quickly prototyping AutoML workflows.

AutoKeras

When AutoKeras was first released, it was dubbed the "Google AutoML killer" due to its promise of making deep learning more accessible and automated. It serves as a neural architecture search (NAS) framework, automatically searching for a well-performing neural network architecture for a given task. Developed and maintained by the DATA Lab at Texas A&M University, AutoKeras provides a high-level API that integrates well with Keras and TensorFlow. The source code is available on GitHub. It supports tasks such as image classification, text classification, and structured data processing, making it a versatile tool for deep learning automation.

Summary

AutoML is designed to help us select the best algorithm for our machine learning pipeline, but with so many AutoML frameworks available, a new challenge arises—which one should we use? Fortunately, the answer can be quite simple. Choose an actively maintained AutoML framework and ensure that you can easily install it on your machine. Some AutoML frameworks come with many dependencies, making installation difficult, so opting for a tool that works seamlessly on your setup is a practical approach. If you can install multiple frameworks (well done, hero!), pick the one that provides detailed insights into the machine learning pipeline, so you don’t end up with a black-box model.
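A quick way to see which frameworks are already importable in your environment is a small standard-library check like the one below. The import names are listed to the best of my knowledge and may differ from the pip package names, so treat the mapping as an assumption and verify it against each project's documentation.

```python
import importlib.util

# Assumed top-level import names. The pip package name can differ,
# e.g. MLJAR AutoML installs as `mljar-supervised` and imports as `supervised`.
FRAMEWORK_MODULES = {
    "TPOT": "tpot",
    "Auto-sklearn": "autosklearn",
    "MLJAR AutoML": "supervised",
    "H2O AutoML": "h2o",
    "PyCaret": "pycaret",
    "AutoGluon": "autogluon",
    "FLAML": "flaml",
}


def is_importable(module_name):
    """Return True if the module can be located, without importing it."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        return False


def installed_frameworks(modules=FRAMEWORK_MODULES):
    """Map each framework name to whether its module was found."""
    return {name: is_importable(module) for name, module in modules.items()}


for name, available in installed_frameworks().items():
    print(f"{name:15s} {'installed' if available else 'not found'}")
```

This only tells you that a package is present, not that it works with your Python version and dependencies, so a short test run on a small dataset is still worth doing.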

While reviewing these AutoML frameworks, I noticed a rather unfortunate pattern—AutoML developers tend to fall into three groups:

- Academia-based researchers – They develop and maintain AutoML projects only during grant-funded periods. Once funding ends, the project is often abandoned.

- Developers from large corporations – They work on AutoML projects that are actively maintained with corporate resources.

- Independent developers from small companies (like me!) – They build and maintain AutoML frameworks out of pure passion for machine learning.

Alright, it’s almost 7 AM, so I’m wrapping up this article! If you have any questions or comments, feel free to reach out to me at piotr [at] my company domain. All the best! 😊
