Navy SEALs, Performance vs Trust, and AI

Recently, I watched a presentation by Simon Sinek about how the U.S. Navy SEALs select members for their elite teams (YouTube video: Why Trust is Key to High-Performing Teams). What struck me was how they balance two qualities: performance and trust. Performance is about how well someone does on the battlefield, under pressure, in life-or-death situations. Trust, on the other hand, is about how they behave off the battlefield - how they treat others, how reliable they are, and whether you feel safe with them outside of combat.

He illustrated this beautifully with a quote:

I may trust you with my life, but do I trust you with my money and my wife?

It's important to remember that the Navy SEALs are not just any team - they are a high-performing team, or, as Sinek puts it, "the top of the top of the top." When the stakes are highest, the importance of trust is clearest.

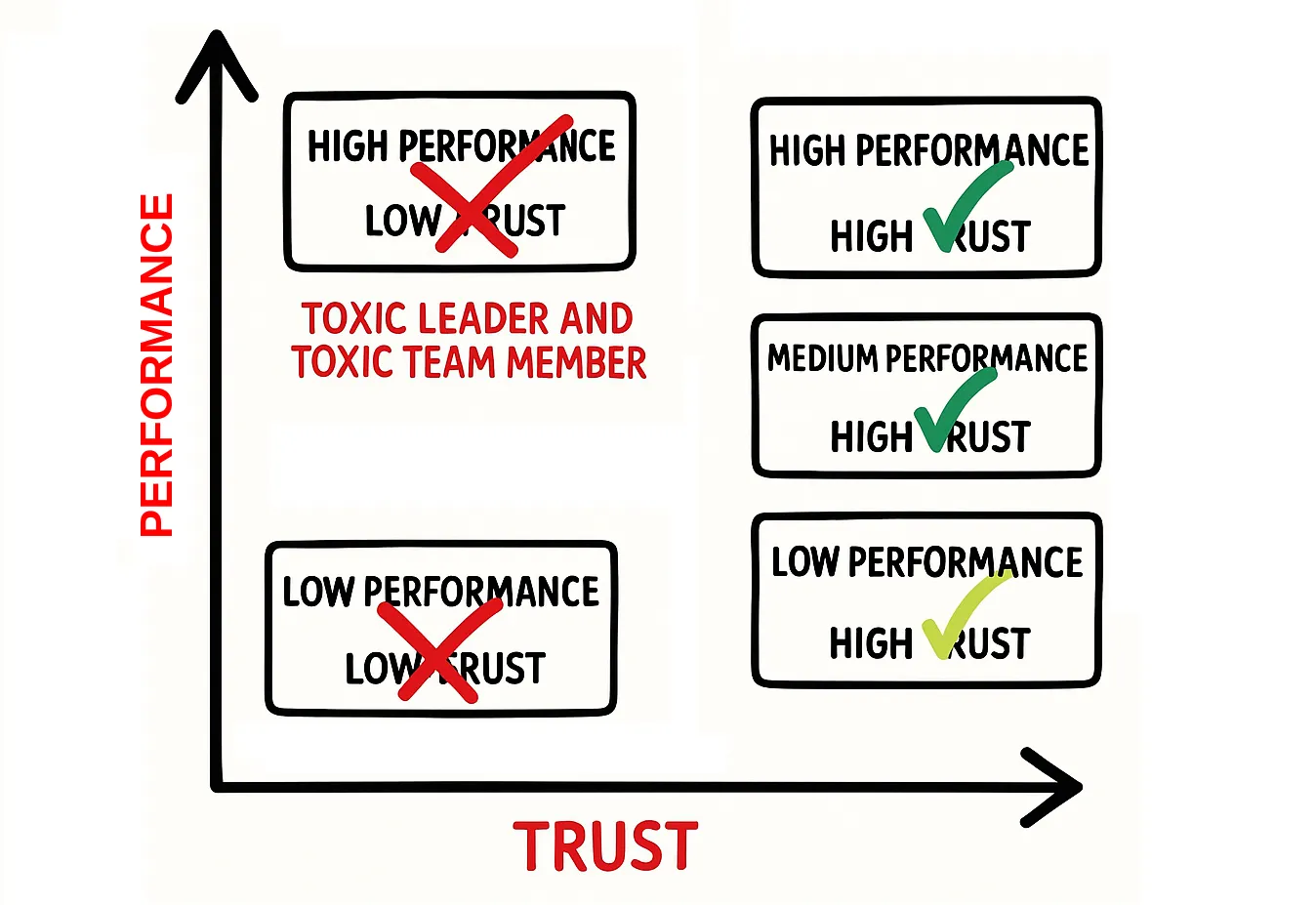

Sinek presented a simple but powerful chart: performance on one axis, trust on the other. In this context, performance refers to skills - how well someone executes their core tasks, follows commands, and performs under pressure. Trust, meanwhile, is all about character: how reliable someone is, how well they support their teammates, and how much others feel safe relying on them.

Of course, everyone wants the person who scores high on both axes - someone with top skills and a high level of trustworthiness. These are the rare individuals who become the foundation of high-performing teams.

But Sinek emphasized something surprising: the Navy SEALs would rather take someone with medium or even low performance, as long as they have high trust. The reason is that skills can be trained and improved over time with practice and support. However, trust and character are much harder to develop - if they can be developed at all.

Conversely, those with high performance but low trust are actively avoided, no matter how talented they are. These individuals can be toxic and create risk, especially in high-stakes situations.

In other words, while skills and performance are necessary, it's character and trust that truly define a strong team. That’s why Navy SEAL training is so tough - not just to identify the best performers, but to find those who are both capable and trustworthy.

What Does This Have to Do With AI?

I think this analogy applies to software development today, especially as we bring artificial intelligence into our teams and workflows. Right now, AI tools can deliver medium to high performance on many technical tasks. They can generate code, analyze data, and even help build entire applications - sometimes with impressive speed and accuracy.

But when it comes to trust, the situation is completely different. The truth is, trust in AI is not just low - it's zero. AI doesn't care about your project, your team, or your users. It has no sense of loyalty, no ethics, and no genuine understanding of responsibility. What's more, if you want your AI assistant to follow a conversation, you have to supply all of the previous messages as context, every time. There's no real memory, no long-term commitment, and no one to rely on if things go wrong.
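To see what "no real memory" means in practice, here is a minimal Python sketch. `fake_llm` is a hypothetical stand-in for a stateless model call, not any real API: it can only see the messages passed in a single request. `ChatSession` is also illustrative. It keeps the history on the client side and resends all of it on every turn, which is the typical pattern with chat-style LLM APIs.

```python
from typing import Dict, List

Message = Dict[str, str]


def fake_llm(messages: List[Message]) -> str:
    """Hypothetical stand-in for a stateless LLM call.

    It can only "see" the messages passed in this one request; nothing
    is remembered between calls.
    """
    last = messages[-1]["content"]
    if last == "What is my name?":
        # Search only the context supplied with this request.
        for msg in messages[:-1]:
            if msg["role"] == "user" and msg["content"].startswith("My name is "):
                name = msg["content"][len("My name is "):].rstrip(".")
                return f"Your name is {name}."
        return "I don't know your name."
    return "Noted."


class ChatSession:
    """Illustrative client that keeps the conversation history itself."""

    def __init__(self) -> None:
        self.history: List[Message] = []

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # The entire history is resent on every call.
        reply = fake_llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Two independent calls: the second one starts fresh.
print(fake_llm([{"role": "user", "content": "My name is Ada."}]))  # Noted.
print(fake_llm([{"role": "user", "content": "What is my name?"}]))  # I don't know your name.

# The same questions through a session that resends the history.
session = ChatSession()
session.send("My name is Ada.")
print(session.send("What is my name?"))  # Your name is Ada.
```

The "memory" lives entirely in `ChatSession.history`. The model function itself holds no state, so anything left out of the request is simply gone.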

Limitations of LLMs: Why AI Isn't Trusted

Andrej Karpathy addressed this topic directly in his recent talk at YC Startup School (watch Andrej Karpathy's presentation). He pointed out three main reasons why large language models (LLMs), the technology behind today's AI systems, cannot be fully trusted:

- Hallucinations and factual errors: LLMs sometimes produce answers that sound confident but are simply wrong or made up.

- Lack of persistent memory: LLMs don't remember past interactions. Every conversation starts fresh unless you explicitly feed the entire history in as context.

- Jagged intelligence: AI is incredibly strong in some areas (like writing code snippets or summarizing text) but surprisingly weak in others, and these boundaries aren't always obvious.

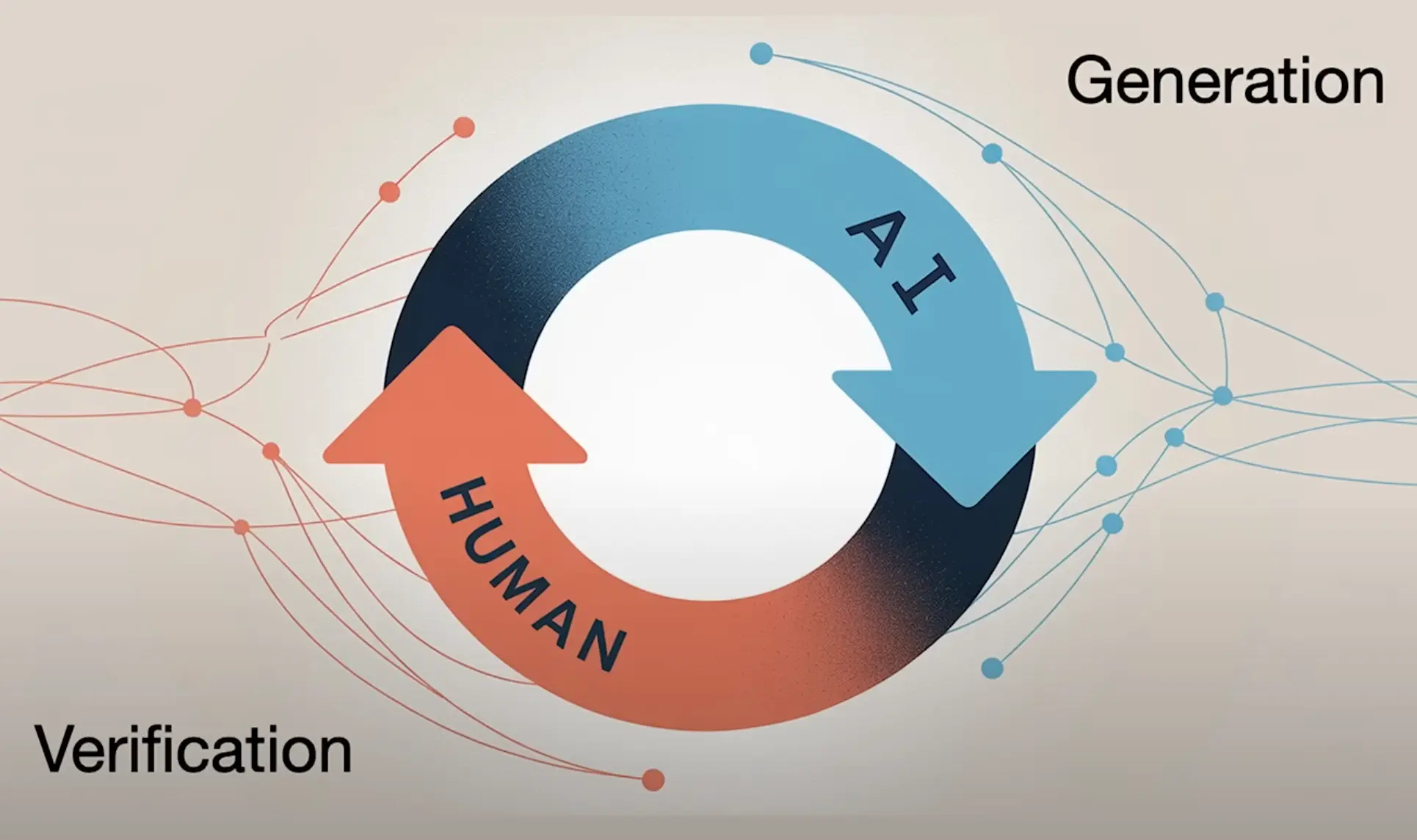

This means that while AI can help us build software faster, it can't be left alone. There still needs to be a human in the loop: a person who can verify, approve, and take responsibility for the AI's output. Karpathy presented this as the generate-verify loop, in which the AI generates and a human verifies. He emphasized that for the loop to be effective, the verification step must be easy and fast. That requires tools that make verifying AI output simple and efficient, and I completely agree with this approach.
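The generate-verify loop can be sketched in a few lines of Python. This is not code from Karpathy's talk. `generate`, `human_verify`, and `generate_verify_loop` are hypothetical names, and `generate` only returns placeholder drafts where a real model call would go. The point is the shape of the loop: the AI proposes, and nothing is accepted until a person approves it.

```python
from typing import Callable, Optional


def generate(task: str, attempt: int) -> str:
    """Hypothetical stand-in for the AI "generate" step."""
    return f"draft {attempt} for: {task}"


def human_verify(candidate: str) -> bool:
    """Interactive "verify" step: a person reads the output and approves it."""
    answer = input(f"Approve this output?\n{candidate}\n[y/N] ")
    return answer.strip().lower() == "y"


def generate_verify_loop(
    task: str,
    verify: Callable[[str], bool],
    max_attempts: int = 3,
) -> Optional[str]:
    """Let the AI generate, but accept nothing until `verify` approves it."""
    for attempt in range(1, max_attempts + 1):
        candidate = generate(task, attempt)  # fast, cheap AI step
        if verify(candidate):                # human checks and approves
            return candidate
    return None  # nothing approved: the human remains responsible


# Scripted reviewer for a non-interactive demo: approve only the second draft.
result = generate_verify_loop(
    "summarize the sales report",
    verify=lambda c: c.startswith("draft 2"),
)
print(result)  # draft 2 for: summarize the sales report
```

With a real person in the loop you would pass `verify=human_verify` instead. The faster and easier that check is, the more useful the loop becomes, which is exactly Karpathy's point.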

Chasing Performance, Forgetting Trust

Right now, the race in AI is all about performance. Faster, smarter, more accurate: everyone is chasing the next breakthrough. But maybe we should also be thinking about trust. When we talk about building autonomous AI agents, we don't just want them to be capable. We want to be able to trust them.

Andrej Karpathy emphasizes the distinction between "generate" (AI creating content or solutions) and "verify" (a human checking and approving the result) in his talk. It also resonates with Simon Sinek's message about the Navy SEALs: high performance is great, but without trust it is not enough. Worse, it can be toxic.

Conclusion

Maybe the lesson from the Navy SEALs is that, in both human teams and AI systems, trust isn't a "nice to have"; it's essential. As we build the next generation of AI tools and agents, we should ask not only how well they perform, but also how much we can trust them.

